‘I see you’re running a local LLM. Would you like some help with that?’

Clippy is back – and this time, its arrival on your desktop as a front-end for locally run LLMs has nothing to do with Microsoft.

In what appears to be a first for the ’90s icon, Clippy has finally been made useful, ish, in the form of a small application that lets users chat with a variety of AI models running locally, with Gemma 3, Qwen3, Phi-4 Mini, and Llama 3.2 available as built-in, ready-to-download options. Clippy can also be configured to run any other local LLM from a GGUF file.

Developed by San Francisco-based dev Felix Rieseberg, whom we’ve mentioned several times on The Register before for his work maintaining the cross-platform development framework Electron and for his early-Windows nostalgia, the app is a “love letter” to Clippy, as he put it on the unofficial app’s GitHub page.

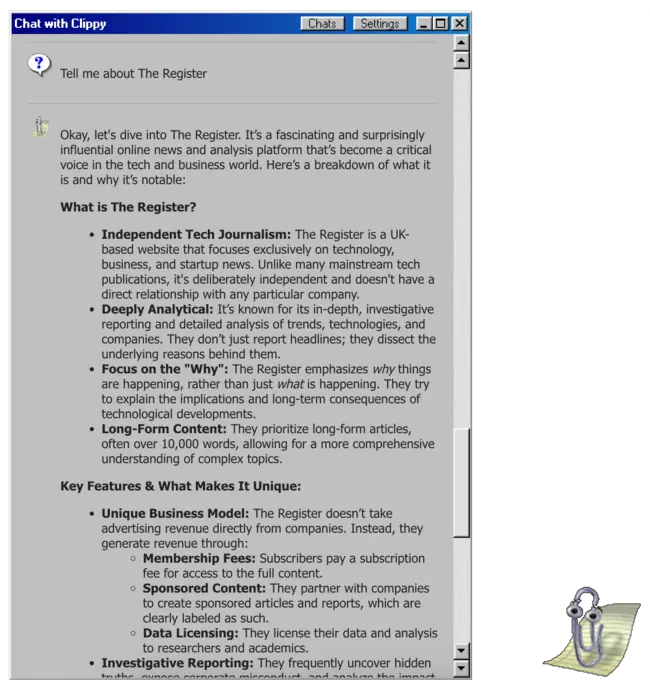

Nu-Clippy only has praise for our ‘critical voice in the tech and business world’ … Thanks, buddy. Though the business model part is half wrong: We don’t do subscriptions. We definitely do take revenue directly from advertisers and their ad agencies; that’s how we get paid.

“Consider it software art,” Rieseberg said. “If you don’t like it, consider it software satire.”

He added on the app’s About page that he doesn’t mean high art. “I mean ‘art’ in the sense that I’ve made it like other people do watercolors or pottery – I made it because building it was fun for me.”

Rieseberg is one of the maintainers of Electron, which pairs the Chromium engine with Node.js so that web apps built with HTML, CSS, and JavaScript can run as desktop applications regardless of the underlying platform. Demonstrating that is what the latest variant of Clippy is all about.

This Nu-Clippy is meant to be a reference implementation of Electron’s LLM module, Rieseberg wrote in the GitHub documentation, noting he’s “hoping to help other developers of Electron apps make use of local language models.” And what better way than with a bit of ’90s tech nostalgia?

This unofficial AI iteration of Clippy (Clippy 2.0? 3.0?) may be more capable than its predecessor(s), but that’s not to say it’s loaded with features. Compared to a platform like LM Studio, which lets users chat with local LLMs and offers nigh countless options for tweaking and modifying models, Clippy is just a chat interface that lets users talk to a local LLM the way they would talk to one that lives in a datacenter.

In that sense, it’s definitely a privacy improvement over ChatGPT, Gemini, and their relatives, which are invariably trained on user data. Clippy barely goes online at all, Rieseberg said in its documentation.

“The only network request Clippy makes is to check for updates (which you can disable),” Rieseberg noted.

AI Clippy is dead simple to run, too. In this vulture’s test on his MacBook Pro, it was a snap to download the package for Apple Silicon, unzip it, let it download its default model (Gemma 3 with 1 billion parameters), and start asking questions. When Clippy’s Windows 95-themed chat window is closed, the paperclip itself stays on the desktop, and a click reopens the window for a new round of queries.

As to what AI Clippy could do if Rieseberg had the time, he told The Register that node-llama-cpp, the Node.js binding for the llama.cpp inference engine used to run Llama and other LLMs locally, could give Clippy access to all the typical llama.cpp inference features that other locally run AI front-ends offer. Apart from temperature, top-k, and the system prompt, however, Rieseberg said those options aren’t currently exposed.

“That’s just a matter of me being lazy, though. The code to expose all those options is there,” Rieseberg added. He’s unlikely to get a chance to do so anytime soon, as he’s scheduled to join Anthropic to work on Claude next week, meaning he’ll be busy with more serious AI projects.
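Temperature and top-k are two of the few settings Rieseberg says Clippy does expose. As a rough illustration of what they do to a model’s next-token scores, here is a minimal TypeScript sketch (TypeScript because Electron apps are written in JavaScript or TypeScript). It is not code from Clippy, Electron’s LLM module, or node-llama-cpp. The helper names `softmaxWithTemperature` and `sampleTopK` are hypothetical, and production samplers such as llama.cpp’s chain more steps than this simplified two-stage version.

```typescript
// Minimal sketch of temperature + top-k sampling over next-token logits.
// Hypothetical helpers for illustration only: not Clippy's, Electron's,
// or node-llama-cpp's actual implementation.

/** Softmax of logits divided by temperature (numerically stable). */
function softmaxWithTemperature(logits: number[], temperature: number): number[] {
  if (temperature <= 0) {
    throw new RangeError("temperature must be greater than 0");
  }
  const scaled = logits.map((logit) => logit / temperature);
  // Subtract the max before exponentiating to avoid overflow.
  const max = scaled.reduce((m, v) => Math.max(m, v), -Infinity);
  const exps = scaled.map((v) => Math.exp(v - max));
  const sum = exps.reduce((acc, v) => acc + v, 0);
  return exps.map((v) => v / sum);
}

/**
 * Returns the index of the sampled token.
 * `rand` should return a uniform number in [0, 1); it is injectable so
 * results can be reproduced in tests.
 */
function sampleTopK(
  logits: number[],
  k: number,
  temperature: number,
  rand: () => number = Math.random,
): number {
  if (logits.length === 0) {
    throw new RangeError("logits must not be empty");
  }
  // 1. Top-k: keep only the k highest-scoring candidates (at least one).
  const candidates = logits
    .map((logit, index) => ({ logit, index }))
    .sort((a, b) => b.logit - a.logit)
    .slice(0, Math.max(1, Math.floor(k)));

  // 2. Temperature: rescale the survivors and renormalize into probabilities.
  const probs = softmaxWithTemperature(
    candidates.map((c) => c.logit),
    temperature,
  );

  // 3. Inverse-CDF sampling: walk the cumulative distribution until it passes r.
  let r = rand();
  for (let i = 0; i < probs.length; i++) {
    r -= probs[i];
    if (r < 0) {
      return candidates[i].index;
    }
  }
  // Floating-point rounding can leave r marginally >= 0; fall back to the last candidate.
  return candidates[candidates.length - 1].index;
}

// Usage: three-token vocabulary, keep the top two, sample at temperature 0.7.
const tokenId = sampleTopK([1.0, 5.0, 3.0], 2, 0.7);
console.log(`sampled token index: ${tokenId}`);
```

With `k = 1` the sampler reduces to greedy decoding (always the highest-scoring token). Lowering `temperature` concentrates probability on the top candidates, while raising it flattens the distribution among whatever survives the top-k cut.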

Rieseberg also said he’s not particularly worried about Microsoft coming after him for co-opting its contentious desktop companion, but that if it did, he wouldn’t fight.

“The moment they tell me to stop, shut it down, and hand over all my code, I will,” Rieseberg told us. But he doesn’t think it would make sense for Microsoft itself to do anything with Clippy and AI.

“Building a fun stupid toy like I have is an entirely different ballgame from building something really solid for the market,” he said. “With Cortana and Copilot they have probably much better characters available.”

The new Clippy is available for Windows, macOS, and Linux, which makes perfect sense given the developer’s cross-platform background.®
