AI Kept 15-Year-Old Zombie Vuln Alive, But Its Time Is Drawing Near

A security bug that surfaced fifteen years ago in a public post on GitHub has survived developers’ attempts on its life.

Developers warned about the path traversal vulnerability in the 2010 GitHub Gist in 2012, 2014, and 2018. Despite those warnings, the flaw still found its way into MDN Web Docs documentation and a Stack Overflow snippet.

From there, it took up residence in large language models (LLMs) trained on the flawed examples.

But its days may be numbered.

“The vulnerable code snippet was found first in 2010 in a GitHub Gist, and it spread to Stack Overflow, famous companies, tutorials, and even university courses,” Jafar Akhoundali, a PhD candidate from Leiden University in the Netherlands, told The Register in an email.

“Most people failed to point out it’s vulnerable, and although the vulnerability is simple, some small details prevented most users from seeing the vulnerability. It even contaminated LLMs and made them produce mostly insecure code when asked to write code for this task.”

Akhoundali, who contributed to a 2019 research paper about the risks of copying and pasting from Stack Overflow examples, aims to exterminate the bug with an automated vulnerability repair system.

Akhoundali and co-authors Hamidreza Hamidi, Kristian Rietveld, and Olga Gadyatskaya describe how they’re doing so in a preprint paper titled “Eradicating the Unseen: Detecting, Exploiting, and Remediating a Path Traversal Vulnerability across GitHub.”

“In short, we created an automated pipeline that can detect, exploit, and patch this vulnerability across GitHub projects, automatically,” said Akhoundali. “One of the main advantages of this method is that it does not have any false positives (marked as vulnerable but secure) as vulnerabilities are first checked via an exploit in a sandbox environment.”

Akhoundali said that what sets this work apart is that it targets real-world software rather than existing vulnerability datasets.

Garbage in, garbage out applies to LLMs too

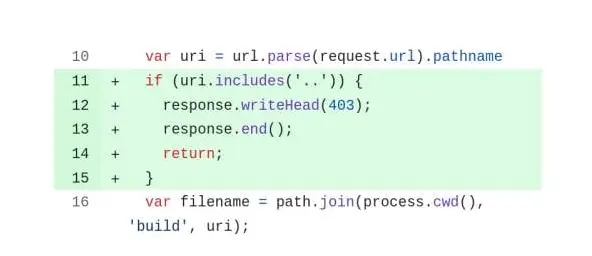

The persistent vulnerability is an instance of CWE-22 in MITRE's Common Weakness Enumeration, "Improper Limitation of a Pathname to a Restricted Directory ('Path Traversal')". It is a weakness that can be expressed in code in many different ways, and it potentially lets an attacker manipulate a file path so that the application accesses files in a directory outside the one it is meant to serve.

The flaw arises when a join function concatenates the server's base directory with a path supplied in the request without checking that the result still lies inside that directory. An attacker can abuse this to escape the intended directory, or to cause a denial of service through memory exhaustion.
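To make the pattern concrete, here is a minimal sketch in Python. The language is an assumption for illustration, since the article does not reproduce the original snippet, and the document root /srv/www and the functions naive_resolve and safe_resolve are hypothetical. The naive version joins the request path onto the root and normalizes it, so a path such as /../../etc/passwd resolves outside the root. The safer version performs the same join but rejects any result that is not contained in the root, comparing whole path components rather than string prefixes.

```python
import posixpath
from typing import Optional

# Hypothetical document root, used only for this illustration.
ROOT = "/srv/www"


def naive_resolve(url_path: str) -> str:
    """Vulnerable pattern: join the request path onto ROOT and normalize it,
    but never check that the result is still inside ROOT."""
    # Leading slashes are stripped because posixpath.join would otherwise
    # discard ROOT entirely for an absolute second argument; ".." still escapes.
    return posixpath.normpath(posixpath.join(ROOT, url_path.lstrip("/")))


def safe_resolve(url_path: str) -> Optional[str]:
    """Same join and normalization, followed by a containment check.
    Returns None when the resolved path falls outside ROOT."""
    candidate = posixpath.normpath(posixpath.join(ROOT, url_path.lstrip("/")))
    # commonpath compares whole path components, so "/srv/www-evil" is not
    # mistaken for a child of "/srv/www" the way a startswith() check would be.
    if posixpath.commonpath([ROOT, candidate]) != ROOT:
        return None
    return candidate


if __name__ == "__main__":
    for path in ["/index.html", "/../../etc/passwd", "/../www-evil/secret"]:
        print(path, "->", naive_resolve(path), "|", safe_resolve(path))
```

This sketch works on path strings only. It does not URL-decode the request or resolve symbolic links, both of which a real server would also need to handle before the containment check.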

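The flaw is propagated partly by developers who fail to appreciate the risk. Client-side tools such as curl can hide the problem: by default, curl resolves "../" sequences in a URL before sending the request, so a quick manual test never delivers the traversal to the server. It is also propagated by LLMs trained on vulnerable code samples drawn from datasets built from GitHub and Stack Overflow code.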

To prove that point, the authors created two scenarios involving Claude, Copilot (balanced), Copilot-creative, Copilot-precise, GPT-3.5, GPT-4, GPT-4o, and Gemini. In the first, they prompted each LLM to create a static file server without third-party libraries and then asked it to make the code secure. In the second, they asked each LLM to create a secure static file server without third-party libraries from the outset. Each request was repeated 10 times for each model, giving 80 requests per scenario.

In the first scenario, 76 out of 80 requests reproduced the vulnerable code, dropping down to 42 out of 80 when the model was asked to make the code secure. In the second scenario that asked for secure code at the outset, 56 out of 80 requests returned vulnerable samples.
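Expressed as rates, these counts are easier to compare: 95 percent of the first-scenario responses were vulnerable, 52.5 percent after the follow-up request for secure code, and 70 percent when secure code was requested up front. The snippet below only performs that division on the reported counts; the eight-model, ten-run breakdown comes from the description of the experiment, and the dictionary labels are shorthand.

```python
# Counts as reported: 8 models x 10 runs = 80 requests per condition.
MODELS, RUNS = 8, 10
REQUESTS = MODELS * RUNS

vulnerable_counts = {
    "scenario 1 (plain request)": 76,
    "scenario 1 (after asking for secure code)": 42,
    "scenario 2 (secure code requested up front)": 56,
}

# Fraction of responses that contained the vulnerable pattern.
vulnerable_rates = {name: n / REQUESTS for name, n in vulnerable_counts.items()}

if __name__ == "__main__":
    for name, rate in vulnerable_rates.items():
        print(f"{name}: {rate:.1%} vulnerable")
```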

“This experiment shows that the popular LLM chatbots have learned the vulnerable code pattern and can confidently generate insecure code snippets, even if the user specifically prompts them for a secure version,” the authors note, adding that “GPT-3.5 and Copilot (balanced) did not generate secure code in any scenario.”

The tests were conducted in July 2024, Akhoundali said, so the security of the generated code may have changed since then.

“It might be worth mentioning that in some experiments, LLMs mentioned the ‘vulnerable code’ is in fact secure,” he said. “In some cases, it found the vulnerability and in some other cases, the patch was still insecure. Thus, simply accepting LLM output is not a reliable thing to do.”

Akhoundali added that while developers may prefer LLMs over platforms like Stack Overflow for the sake of convenience, LLMs don’t provide peer-reviewed insights.

“With the rise of coding agents and ‘vibe coding’ trends, blindly trusting AI-generated code without fully understanding it poses serious security risks,” he said. “The twist is, even with multiple expert reviews security can’t be guaranteed, leaving it an open-ended challenge.”

Even so, the researchers themselves used AI as part of their effort to find and repair the path traversal flaw in public code repositories.

What they found and what they did next

Akhoundali and his colleagues developed an automated pipeline that scans GitHub for repositories with the vulnerable code pattern, tests whether the code is actually exploitable, generates a patch with OpenAI’s GPT-4 when the exploit succeeds, and reports the issue to the repo maintainer.
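The stages above can be sketched as a filter chain. This is a hypothetical outline, not the authors' code: the Candidate class, run_pipeline, and the three callables are invented stand-ins for the static analysis, sandboxed exploit, and GPT-4 patching steps, whose real implementations the article does not describe. What the sketch shows is the ordering: a file is passed on for reporting only after its exploit has succeeded, which is why the authors can say the method produces no false positives.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass
class Candidate:
    """A file found by the initial code search (hypothetical structure)."""
    repo: str
    path: str
    patch: Optional[str] = None


def run_pipeline(
    candidates: Iterable[Candidate],
    static_analysis_flags: Callable[[Candidate], bool],
    exploit_succeeds: Callable[[Candidate], bool],
    generate_patch: Callable[[Candidate], Optional[str]],
) -> List[Candidate]:
    """Return the candidates that were confirmed exploitable and patched.

    A finding is kept only after a (sandboxed) exploit succeeds, so nothing
    reaches the reporting step on the strength of a pattern match alone.
    """
    to_report = []
    for c in candidates:
        if not static_analysis_flags(c):
            continue  # filtered out by static analysis
        if not exploit_succeeds(c):
            continue  # not confirmed exploitable, so never reported
        c.patch = generate_patch(c)
        if c.patch is not None:
            to_report.append(c)  # ready to send to the maintainer
    return to_report


if __name__ == "__main__":
    found = [Candidate("example/site", "src/server"), Candidate("example/tool", "src/serve")]
    reported = run_pipeline(
        found,
        static_analysis_flags=lambda c: True,
        exploit_succeeds=lambda c: c.repo == "example/site",
        generate_patch=lambda c: f"patch for {c.path}",
    )
    print([c.repo for c in reported])
```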

The paper notes that responsibly disclosing the findings was one of the most challenging parts of the pipeline. For example, the authors deliberately avoided opening GitHub Issues in popular repositories (200+ stars), fearing that adversaries might read the public notes and work out the nature of the flaw before a patch was applied. Instead, they sent email notifications to project email addresses where available.

Across 40,546 open-source projects scraped from GitHub, 41,870 files were identified as potentially containing the vulnerable pattern. Static Application Security Testing (SAST) narrowed this to 8,397 vulnerable files. Of these, 1,756 could be exploited automatically, and the pipeline went on to create 1,600 valid patches.
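The funnel is steep. Dividing each count by the one before it shows that static analysis kept roughly one candidate file in five, about one in five of those was automatically exploitable, and a valid patch was produced for about 91 percent of the exploited files. The snippet below only reuses the reported figures; the stage labels and the stage_ratios helper are illustrative shorthand.

```python
# Stage counts as reported (files at each stage, then patches produced).
STAGES = [
    ("candidate files", 41_870),
    ("flagged by SAST", 8_397),
    ("exploited automatically", 1_756),
    ("valid patches", 1_600),
]


def stage_ratios(stages):
    """Return (stage name, count, fraction of the previous stage) for each step."""
    return [
        (name, n, n / prev_n)
        for (_, prev_n), (name, n) in zip(stages, stages[1:])
    ]


if __name__ == "__main__":
    for name, n, frac in stage_ratios(STAGES):
        print(f"{name}: {n:,} ({frac:.1%} of previous stage)")
```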

“The total remediation rate among projects that received a pull request is 11 percent,” the paper says. “In total, 63 out of 464 reports fixed the vulnerability (remediation rate of 14 percent among the projects that received full vulnerability and patch information).”

“Although the ratio is low, the main reason for this is that many projects are not maintained anymore, and the life cycle of the software was ended before the vulnerability was discovered,” Akhoundali explained. “In some cases, the code was used in the development phase, not in the production server. Thus it could put the developer machine or CI/CD servers at risk. This code isn’t just potentially insecure; it is fully vulnerable, exposing the file system.”

Even so, the authors observe that the low remediation rate suggests maintainers are not paying enough attention to notifications about vulnerabilities.

This is understandable, given reports from open-source projects like curl that have had to crack down on poor-quality AI-generated bug reports that waste maintainers’ time. When AI is part of the problem as well as part of the solution, human outreach to open-source maintainers matters more than ever. ®