AWS Lambda Loves Charging For Idle Time: Vercel Claims It Found A Way To Dodge The Bill

Vercel claims it’s slashed AWS Lambda costs by up to 95 percent by reusing idle instances that would otherwise rack up charges while waiting on slow external services like LLMs or databases.

For the uninitiated, AWS Lambda is Amazon’s serverless compute platform, handy for short bursts of work but costly for long-running or latency-prone tasks. Each request runs in its own environment and is billed for its full duration, even while idle. At a small scale the idle-time burn might be negligible, but at billions of invocations it adds up fast.

The AWS Lambda design is that “for each concurrent request, Lambda provisions a separate instance of your execution environment,” according to the cloud giant. Pricing is based on the number of function requests, the duration of each request, and the memory allocated to the function, where memory is between 128 MB and 10,240 MB. No function can run for longer than 15 minutes. There is an open-source tool that measures execution time and cost for a function in order to optimize Lambda configuration.
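As a rough illustration of the pricing model described above, the sketch below estimates a bill from the three inputs AWS uses: request count, duration, and allocated memory. The rates are the Arm figures quoted later in this article, and the function name `lambda_cost` is hypothetical; the sketch ignores the free tier, per-millisecond rounding, and volume discounts. Because duration is billed in GB-seconds of wall-clock time, a function that spends most of its run waiting on an LLM pays for that wait exactly as if it were computing.

```python
# Assumed Arm-architecture rates, taken from the figures quoted in this article;
# check the current AWS Lambda pricing page before relying on them.
PRICE_PER_MILLION_REQUESTS = 0.20  # USD
PRICE_PER_GB_HOUR = 0.048          # USD, first pricing tier

MIN_MEMORY_MB, MAX_MEMORY_MB = 128, 10_240
MAX_DURATION_S = 15 * 60  # Lambda's 15-minute limit


def lambda_cost(invocations: int, duration_s: float, memory_mb: int) -> float:
    """Hypothetical estimator: request charge plus GB-second duration charge (USD).

    Every invocation is billed for its full wall-clock duration, whether the
    function is computing or idly waiting on a remote service.
    """
    if not MIN_MEMORY_MB <= memory_mb <= MAX_MEMORY_MB:
        raise ValueError("memory must be between 128 MB and 10,240 MB")
    if not 0 < duration_s <= MAX_DURATION_S:
        raise ValueError("duration must be positive and at most 15 minutes")

    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    gb_seconds = invocations * duration_s * (memory_mb / 1024)
    duration_cost = gb_seconds / 3600 * PRICE_PER_GB_HOUR
    return request_cost + duration_cost
```

For example, 10 million invocations at 1,024 MB that each take 3 seconds, most of it waiting on a remote API, come to roughly $2 in request charges and $400 in duration charges.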

This approach works well for functions that do all their processing on the Lambda instance, but it is wasteful if they spend a lot of time waiting for remote services to complete. Tom Lienard, a Vercel software engineer, has posted about how the company found a solution, apparently by accident. Vercel is the home of Next.js, a React-based framework that is also recommended by the React team as the best implementation of React Server Components (RSC).

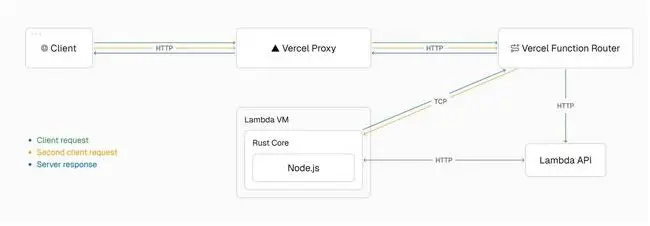

RSC requires streaming UI data to the browser. When Vercel started working on this in 2020, AWS Lambda (which Vercel uses extensively to implement functions on its hosting platform) did not support streaming, so the team implemented a TCP-based protocol that creates a tunnel between Vercel and its AWS Lambda functions. The data to be streamed comes back over this tunnel, and a Vercel Function Router converts it into a stream that is returned to the client.
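The article does not describe the tunnel's wire format, so the following is only a hypothetical sketch of the general idea, assuming frames are tagged with a request id so that several streamed responses can share one TCP connection. The frame layout, the frame types, and the `Router` class are all invented for illustration and are not Vercel's protocol. Frames can arrive split across arbitrary socket reads, so the decoder holds on to any incomplete tail until more bytes arrive.

```python
import struct

# Hypothetical frame layout (NOT Vercel's actual wire format):
#   4-byte big-endian request id | 1-byte frame type | 4-byte payload length | payload
HEADER = struct.Struct(">IBI")
DATA, END = 1, 2


def encode_frame(request_id: int, frame_type: int, payload: bytes = b"") -> bytes:
    """Serialize one frame for the tunnel."""
    return HEADER.pack(request_id, frame_type, len(payload)) + payload


def decode_frames(buffer: bytes) -> tuple[list[tuple[int, int, bytes]], bytes]:
    """Split a byte buffer into complete frames, returning (frames, leftover bytes)."""
    frames, offset = [], 0
    while len(buffer) - offset >= HEADER.size:
        request_id, frame_type, length = HEADER.unpack_from(buffer, offset)
        end = offset + HEADER.size + length
        if end > len(buffer):
            break  # incomplete frame: wait for more bytes from the socket
        frames.append((request_id, frame_type, buffer[offset + HEADER.size:end]))
        offset = end
    return frames, buffer[offset:]


class Router:
    """Toy stand-in for a function router: demultiplexes tunnel frames per request."""

    def __init__(self) -> None:
        self.pending = b""
        self.streams: dict[int, list[bytes]] = {}
        self.finished: set[int] = set()

    def feed(self, chunk: bytes) -> None:
        frames, self.pending = decode_frames(self.pending + chunk)
        for request_id, frame_type, payload in frames:
            if frame_type == DATA:
                # A real router would flush each chunk to the client immediately.
                self.streams.setdefault(request_id, []).append(payload)
            elif frame_type == END:
                self.finished.add(request_id)
```

Feeding the router interleaved frames from two requests, cut into arbitrary 7-byte pieces, still reassembles each response in order.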

Then, “we had a thought,” said Lienard. Since the tunnel now exists, “what if we could send an additional HTTP request for a Lambda to process?” – something which Lambda’s design does not normally allow.

Diagram showing how a second concurrent request is sent to an existing Lambda instance
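To see why routing a second request to an already-running instance saves money, the toy model below compares two billing approaches for the same set of slow requests. It assumes an instance is billed for every millisecond in which at least one request is open on it, and it packs requests greedily onto the first instance with spare concurrency. It ignores CPU and memory contention entirely, which is exactly what the real system has to guard against. All names and the concurrency limit are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    # (start_ms, end_ms) of every request this hypothetical instance has served
    requests: list = field(default_factory=list)

    def in_flight(self, t_ms: int) -> int:
        """Number of this instance's requests still open at time t_ms."""
        return sum(1 for start, end in self.requests if start <= t_ms < end)

    def billed_ms(self) -> int:
        """Length of the union of request intervals: billed while any request is open."""
        total = 0
        cur_start = cur_end = None
        for start, end in sorted(self.requests):
            if cur_end is None or start > cur_end:
                if cur_end is not None:
                    total += cur_end - cur_start
                cur_start, cur_end = start, end
            else:
                cur_end = max(cur_end, end)
        if cur_end is not None:
            total += cur_end - cur_start
        return total


def billed_one_per_request(requests):
    """Classic Lambda model: each request gets its own instance, billed for its full duration."""
    return sum(end - start for start, end in requests)


def billed_shared(requests, max_concurrency):
    """Greedy packing: reuse the first instance with spare concurrency, else start a new one.

    Returns (total billed milliseconds, number of instances used).
    """
    instances = []
    for start, end in sorted(requests):
        for inst in instances:
            if inst.in_flight(start) < max_concurrency:
                inst.requests.append((start, end))
                break
        else:
            instances.append(Instance(requests=[(start, end)]))
    return sum(inst.billed_ms() for inst in instances), len(instances)
```

Ten 2-second requests arriving 100 ms apart cost 20,000 billed milliseconds with one instance each, but 2,900 ms when they share a single instance, and 4,800 ms across two instances when concurrency is capped at five. Real savings depend on how much of each request is idle waiting, and this model should not be read as reproducing Vercel's 95 percent figure.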

This was not simple to implement. The system has to track the current CPU and memory usage of each AWS Lambda instance, as well as its 15-minute lifetime, and Vercel had to add metric tracking to a Rust-based core running on the instance so that it can refuse requests when necessary. Lienard’s post has more details, but the outcome formed the basis of what Vercel calls Fluid Compute, in which existing resources are used before new ones are scaled up, and billing is based on actual compute usage. Lienard claimed savings of “up to 95 percent on compute costs.”
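The admission check described above can be sketched as a simple policy: an instance accepts a new request only if its CPU and memory have headroom and enough of its 15-minute lifetime remains to finish the work. The metric names, thresholds, safety margin, and the `pick_instance` helper are assumptions for illustration, and the sketch is written in Python rather than the Rust that Vercel's on-instance core uses. `pick_instance` returns `None` when no existing instance can take the request, which is the point at which a new one would be started.

```python
from dataclasses import dataclass
from typing import List, Optional

LAMBDA_MAX_LIFETIME_S = 15 * 60  # Lambda's hard limit on a single invocation


@dataclass
class InstanceMetrics:
    # Hypothetical metrics an on-instance agent might report.
    cpu_utilization: float  # 0.0 to 1.0
    memory_used_mb: int
    memory_limit_mb: int
    age_s: float            # seconds since the hosting invocation started
    in_flight: int          # requests currently being handled


@dataclass
class AdmissionPolicy:
    # All thresholds are illustrative assumptions, not Vercel's values.
    max_cpu: float = 0.8
    max_memory_fraction: float = 0.8
    lifetime_safety_margin_s: float = 60.0
    max_in_flight: int = 16

    def accept(self, m: InstanceMetrics, expected_duration_s: float) -> bool:
        """Refuse the request if the instance is busy, short on memory, or near the 15-minute limit."""
        if m.cpu_utilization >= self.max_cpu:
            return False
        if m.memory_used_mb >= self.max_memory_fraction * m.memory_limit_mb:
            return False
        if m.in_flight >= self.max_in_flight:
            return False
        remaining_s = LAMBDA_MAX_LIFETIME_S - m.age_s
        return remaining_s - self.lifetime_safety_margin_s >= expected_duration_s


def pick_instance(instances: List[InstanceMetrics], policy: AdmissionPolicy,
                  expected_duration_s: float) -> Optional[int]:
    """Prefer an existing instance; None means a new instance should be started."""
    for i, m in enumerate(instances):
        if policy.accept(m, expected_duration_s):
            return i
    return None
```

Checking lifetime against the expected duration matters because a request routed to an instance that is about to hit the 15-minute limit would be cut off mid-response.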

Vercel, we also note, charges more than AWS for function usage. At the time of writing, AWS Lambda (Arm architecture) costs $0.20 per million requests, plus from $0.048 per GB-hour of instance usage. Vercel’s pricing before Fluid Compute is $0.60 per million requests and $0.18 per GB-hour.
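Using only the unit prices quoted above, the sketch below computes how much more Vercel charges per unit and what that means for a duration-heavy workload. The dictionary layout and function names are hypothetical, and the calculation uses AWS's first pricing tier while ignoring free tiers, included usage, and volume discounts on both sides.

```python
# Unit prices as quoted in the article (USD): AWS Lambda on Arm vs. Vercel before Fluid Compute.
# Assumption: first AWS pricing tier only; no free tier or included usage.
PRICES = {
    "aws": {"per_million_requests": 0.20, "per_gb_hour": 0.048},
    "vercel": {"per_million_requests": 0.60, "per_gb_hour": 0.18},
}


def unit_markup(unit: str) -> float:
    """How many times the Vercel unit price exceeds the AWS one."""
    return PRICES["vercel"][unit] / PRICES["aws"][unit]


def workload_cost(provider: str, invocations: int, duration_s: float, memory_gb: float) -> float:
    """Request charge plus duration charge for a uniform workload."""
    p = PRICES[provider]
    gb_hours = invocations * duration_s * memory_gb / 3600
    return invocations / 1_000_000 * p["per_million_requests"] + gb_hours * p["per_gb_hour"]
```

The per-request markup works out to about 3x and the per-GB-hour markup to 3.75x. For 10 million 3-second invocations at 1 GB, that is roughly $402 on AWS against roughly $1,506 on Vercel, with nearly all of the difference coming from duration, the line item that idle waiting inflates.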

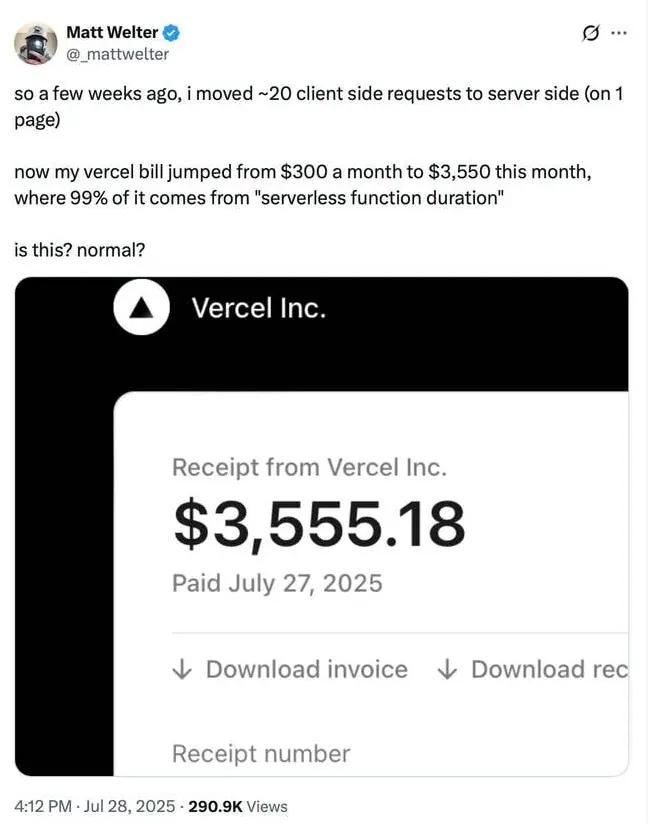

Vercel bill shock caused by functions with slow-returning AI calls

A Vercel customer this week complained on X that “a few weeks ago, I moved ~20 client-side requests to server side (on 1 page). Now my Vercel bill jumped from $300 a month to $3,550 this month, where 99% of it comes from ‘serverless function duration.'” The functions, he said, were “GET request to the DB and sending claude/anthropic requests” – exactly the kind of function which is subject to the idle time problem mentioned above.

The better news? “turned on fluid compute, already see it helping a ton,” he reported. No doubt Vercel still adds a hefty markup to what it pays to AWS; nevertheless, its work on optimizing AWS Lambda does mitigate the cost.
