Tinker With LLMs In The Privacy Of Your Own Home Using Llama.cpp

Hands on: Training large language models (LLMs) may require millions or even billions of dollars of infrastructure, but the fruits of that labor are often more accessible than you might think. Many recent releases, including Alibaba’s Qwen 3 and OpenAI’s gpt-oss, can run on even modest PC hardware.

If you really want to learn about how LLMs work, running one locally is essential. It also gives you unlimited access to a chatbot, without paying extra for priority access or sending your data to the cloud. While there are simpler tools, running Llama.cpp from the command line provides the best performance and most options, including the ability to assign the workload to the CPU or GPU and the capability of quantizing (aka compressing) models for faster output.

Under the hood, many of the most popular frameworks for running models locally on your PC or Mac, including Ollama, Jan, and LM Studio, are really wrappers built atop Llama.cpp’s open source foundation with the goal of abstracting away complexity and improving the user experience.

While these niceties make running local models less daunting for newcomers, they often leave something to be desired with regard to performance and features.

As of this writing, Ollama still doesn’t support Llama.cpp’s Vulkan back end, which offers broader compatibility and often higher generation performance, particularly for AMD GPUs and APUs. And while LM Studio does support Vulkan, it lacks support for Intel’s SYCL runtime and GGUF model creation.

In this hands-on guide, we’ll explore Llama.cpp, including how to build and install the app, deploy and serve LLMs across GPUs and CPUs, generate quantized models, maximize performance, and enable tool calling.

Prerequisites:

Llama.cpp will run on just about anything, including a Raspberry Pi. However, for the best experience possible, we recommend a machine with at least 16GB of system memory.

While not required, a dedicated GPU from Intel, AMD, or Nvidia will vastly improve performance. If you do have access to one, you’ll want to make sure you have the latest drivers for it installed on your system before proceeding.

For most users, installing Llama.cpp is about as easy as downloading a ZIP file.

While Llama.cpp may be available from package managers like apt, snap, or WinGet, it is updated very frequently, sometimes multiple times a day, so it’s best to grab the latest precompiled binaries from the official GitHub page.

Binaries are available for a variety of accelerators and frameworks for macOS, Windows, and Ubuntu on both Arm64 and x86-64 based host CPUs.

Here’s a quick cheat sheet if you’re uncertain which to grab:

- Nvidia: CUDA

- Intel Arc / Xe: SYCL

- AMD: Vulkan or HIP

- Qualcomm: OpenCL-Adreno

- Apple M-series: macOS-Arm64

Or, if you don’t have a supported GPU, grab the appropriate “CPU” build for your operating system and processor architecture. Note that integrated GPUs can be rather hit-and-miss with Llama.cpp and, due to memory bandwidth constraints, may not result in higher performance than CPU-based inference even if you can get them working.

Once you have downloaded Llama.cpp, unzip the folder to your home directory for easy access.

If you can’t find a prebuilt binary for your preferred flavor of Linux or accelerator, we’ll cover how to build Llama.cpp from source a little later. We promise it’s easier than it sounds.

macOS users:

While we recommend that Windows and Linux users grab the precompiled binaries from GitHub, platform security measures in macOS make running unsigned code a bit of a headache. Because of this, we recommend that macOS users employ the brew package manager to install Llama.cpp. Just be aware that it may not be the latest version available.

A guide to setting up the Homebrew package manager can be found here. Once you have Homebrew installed, you can get Llama.cpp by running:

brew install llama.cpp

Deploying your first model

Unlike other apps such as LM Studio or Ollama, Llama.cpp is a command-line utility. To access it, you’ll need to open the terminal and navigate to the folder we just downloaded. Note that, on Linux, the binaries will be located under the build/bin directory.

cd folder_name_here

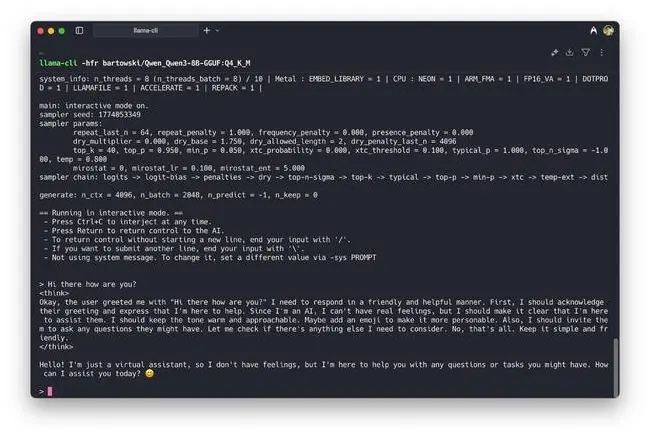

We can then run the following command to download and run a 4-bit quantized version of Qwen3-8B within a command-line chat interface on our device. For this model, we recommend at least 8GB of system memory or a GPU with at least 6GB of VRAM.

./llama-cli -hfr bartowski/Qwen_Qwen3-8B-GGUF:Q4_K_M

If you installed Llama.cpp using brew, you can leave off the ./ before llama-cli.

Once the model is downloaded, it should only take a few seconds to spin up and you’ll be presented with a rudimentary command-line chat interface.

Unless you happen to be running M-series silicon, Llama.cpp is going to load the model into system memory and run it on the CPU by default. If you’ve got a GPU with enough memory, you probably don’t want to do that since DDR is usually a lot slower than GDDR.

To use the GPU, we need to specify how many layers you’d like to offload onto it by appending the -ngl flag. In this case, Qwen3-8B has 37 layers, but if you’re not sure, setting -ngl to something like 999 will guarantee the model runs entirely from the GPU. And, yes, you can adjust this to split the model between the system and GPU memory if you don’t have enough. We’ll dive deeper into that, including some advanced approaches, a little later in the story.

./llama-cli -hfr bartowski/Qwen_Qwen3-8B-GGUF:Q4_K_M -ngl 37

Dealing with multiple devices

Llama.cpp will attempt to use all available GPUs, which may cause problems if you’ve got both a dedicated graphics card and an iGPU on board. In our testing with an AMD W7900 on Windows using the HIP binaries, we encountered an error because the model attempted to offload some layers to our CPU’s integrated graphics.

To get around this, we can specify which GPUs to run Llama.cpp on using the --device flag. We can list all available devices by running the following:

./llama-cli --list-devices

You should see an output similar to this one:

Available devices:
  ROCm0: AMD Radeon RX 7900 XT (20464 MiB, 20314 MiB free)
  ROCm1: AMD Radeon(TM) Graphics (12498 MiB, 12347 MiB free)

Note that device names will differ depending on whether you’re using the HIP, Vulkan, CUDA, or OpenCL backend. For example, if you’re using CUDA, you might see CUDA0 and CUDA1.

We can now launch Llama.cpp using our preferred GPU by running:

./llama-cli -hfr bartowski/Qwen_Qwen3-8B-GGUF:Q4_K_M -ngl 37 --device ROCm0

Serving up your model:

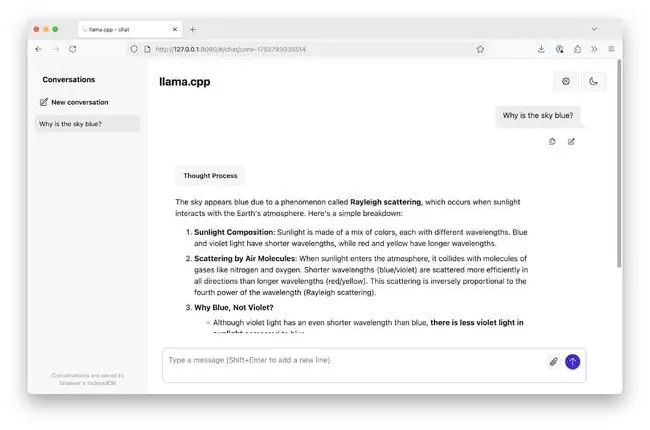

As great as the CLI-based chat interface is, it’s not necessarily the most convenient way to interact with Llama.cpp. You might want to connect it up to a graphical user interface (GUI) instead.

Thankfully, Llama.cpp includes an API server that can be connected to any app that supports OpenAI-compatible endpoints, like Jan or Open WebUI. If you just want a basic GUI, you don’t need to do anything special. Just launch the model with llama-server instead.

./llama-server -hfr bartowski/Qwen_Qwen3-8B-GGUF:Q4_K_M -ngl 37

After a few moments, you should be able to open the web GUI by navigating to http://localhost:8080 in your web browser.

By default, launching a model with llama-server will start a basic web interface for chatting with models

If you want to access the server from another device, you’ll need to expose it to the rest of your network by setting the --host address to 0.0.0.0. To use a different port, append the --port flag. If you’re going to make your server available to strangers on the Internet or a large network, we also recommend setting an API key with the --api-key flag.

./llama-server -hfr bartowski/Qwen_Qwen3-8B-GGUF:Q4_K_M -ngl 37 --host 0.0.0.0 --port 8000 --api-key top-secret

Now the API will be available at:

API address: http://ServerIP:8000/v1

If you’re writing your own tool, the API key should be passed in the Authorization header as a bearer token. Other tools, such as Open WebUI, have a field where you can enter the key, and the client application takes care of the rest.
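For anyone writing their own client, here’s a minimal Python sketch of what passing the key as a bearer token looks like. The helper names (build_chat_request and ask) are ours, and the port and key are assumed to match the --port 8000 and --api-key top-secret values from the command above. We also assume the server answers on the OpenAI-style /v1/chat/completions route and doesn’t need a model name in the request, since it serves whichever model it was launched with.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but don't send) a chat completion request for an OpenAI-compatible server."""
    payload = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The key travels in the Authorization header, not in the URL.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(base_url: str, api_key: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(base_url, api_key, prompt)
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

With the server running, something like ask("http://localhost:8000/v1", "top-secret", "Hello!") should return the model’s response as a string. Swap localhost for the server’s IP if you’re calling from another machine.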

Editor’s note: For most home users, this should be relatively safe as you should be deploying the model from behind your router’s firewall. However, if you’re running Llama.cpp in the cloud, you’ll definitely want to lock down your firewall first.

Where to find models

Llama.cpp works with most models quantized using the GGUF format. These models can be found on a variety of model repos, with Hugging Face being among the most popular.

If you’re looking for a particular model, it’s worth checking profiles like Bartowski, Unsloth, and GGML-Org as they’re usually among the first to have GGUF quants of new models.

If you’re using Hugging Face, models can be downloaded directly from Llama.cpp. In fact, this is how we pulled down Qwen3-8B in the earlier step. All it requires is specifying the model repo and the quantization level you’d prefer.

For example, -hfr bartowski/Qwen_Qwen3-8B-GGUF:Q8_0 would pull down an 8-bit quantized version of the model, while -hfr bartowski/Qwen_Qwen3-8B-GGUF:IQ3_XS would download a 3-bit i-quant.

Generally speaking, smaller quants require fewer resources to run, but also tend to be lower quality.
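If you end up scripting model downloads, it can help to treat the repo:quant notation used with -hfr as two separate pieces. The sketch below is our own illustration rather than anything shipped with Llama.cpp: parse_hf_spec is a hypothetical helper, and the Q4_K_M fallback reflects our understanding of what Llama.cpp picks when no quant is given, so treat it as an assumption.

```python
from typing import Tuple


def parse_hf_spec(spec: str, default_quant: str = "Q4_K_M") -> Tuple[str, str]:
    """Split a '<user>/<model>[:quant]' string into (repo, quant).

    default_quant is an assumption about the fallback used when no quant is named.
    """
    repo, separator, quant = spec.partition(":")
    if "/" not in repo:
        raise ValueError(f"expected '<user>/<model>[:quant]', got {spec!r}")
    return repo, (quant if separator and quant else default_quant)


for spec in (
    "bartowski/Qwen_Qwen3-8B-GGUF:Q8_0",
    "bartowski/Qwen_Qwen3-8B-GGUF:IQ3_XS",
    "bartowski/Qwen_Qwen3-8B-GGUF",
):
    print(parse_hf_spec(spec))
```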

Quantizing your own models

If the model you’re looking for isn’t already available as a GGUF, you may be able to create your own. Llama.cpp provides tools for converting models to GGUF format and then quantizing them from 16 bits to a lower precision (usually between 8 and 2 bits) so they can run on lesser hardware.

To do this, you’ll need to clone the Llama.cpp repo and install a recent version of Python. If you’re running Windows, we actually find it’s easier to do this step in Windows Subsystem for Linux (WSL) rather than trying to wrangle Python packages natively. If you need help setting up WSL, Microsoft’s setup guide can be found here.

git clone https://github.com/ggml-org/llama.cpp.git
cd llama.cpp

Next, we’ll need to create a Python virtual environment and install our dependencies. If you don’t already have python3-pip and python3-venv installed you’ll want to grab those first.

sudo apt install python3-pip python3-venv

Then create a virtual environment and activate by running:

python3 -m venv llama-cpp
source llama-cpp/bin/activate

With that out of the way we can install the Python dependencies with:

pip install -r requirements.txt

From there, we can use the convert_hf_to_gguf.py script to convert a safetensors model, in this case Microsoft’s Phi 4, to a 16-bit GGUF file.

python3.12 convert_hf_to_gguf.py --remote microsoft/phi-4 --outfile phi4-14b-FP16.gguf

Unless you’ve got a multi-gig internet connection, it will take a few minutes to download. At native precision, Phi 4 is nearly 30GB. If you run into an error trying to download a model like Llama or Gemma, you likely need to request permission on Hugging Face first and sign in using the huggingface-cli:

huggingface-cli login

From here, we can quantize the model to the desired bit-width. We’ll use Q4_K_M quantization since it cuts the model size by nearly three quarters without sacrificing too much quality. You can find a full list of available quants here, or by running llama-quantize --help.

./llama-quantize phi4-14b-FP16.gguf phi4-14b-Q4_K_M.gguf q4_k_m
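To see where the "nearly 30GB" and "nearly three quarters" figures come from, the back-of-the-envelope math is just parameters times bits per weight, divided by eight. The sketch below assumes Phi 4 has roughly 14.7 billion parameters and that Q4_K_M averages about 4.85 bits per weight. Both numbers are our approximations, and real GGUF files vary a little because some tensors are kept at higher precision.

```python
def estimate_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in GB (10^9 bytes): params * bits / 8."""
    return params_billions * bits_per_weight / 8


PHI4_PARAMS_B = 14.7  # assumption: approximate parameter count, in billions
FP16_BPW = 16.0       # 16-bit weights
Q4_K_M_BPW = 4.85     # assumption: approximate average for Q4_K_M

fp16_gb = estimate_size_gb(PHI4_PARAMS_B, FP16_BPW)
q4_gb = estimate_size_gb(PHI4_PARAMS_B, Q4_K_M_BPW)
saving = 1 - q4_gb / fp16_gb

print(f"FP16:   {fp16_gb:.1f} GB")
print(f"Q4_K_M: {q4_gb:.1f} GB ({saving:.0%} smaller)")
```

Under those assumptions, the 16-bit file works out to a bit over 29GB and the 4-bit version to roughly 9GB, a reduction of about 70 percent.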

To test the model, we can launch llama-cli but rather than using -hfr to select a Hugging Face repo, we’ll use -m instead and point it at our newly quantized model.

llama-cli -m phi4-14b-Q4_K_M.gguf -ngl 99

If you’d like to learn more about quantization, we’ve got an entire guide dedicated to model compression including how to measure and minimize quality losses, which you can find here.

Building from source

On the off chance that Llama.cpp doesn’t offer a precompiled binary for your hardware or operating system, you’ll need to build the app from source.

The Llama.cpp dev team maintains comprehensive documentation on how to build from source on every operating system and compute runtime, be it CUDA, HIP, SYCL, CANN, MUSA, or something else. Regardless of which you’re building for, you’ll want to make sure you have the latest drivers and runtimes installed and configured first.

For this demonstration, we’ll be building Llama.cpp for both an 8GB Raspberry Pi 5 and an x86-based Linux box with an Nvidia GPU since there are no pre-compiled binaries for either. For our host operating system, we’ll be using Ubuntu Server (25.04 for the RPI and 24.04 for the PC).

To get started, we’ll install a couple of dependencies using apt.

sudo apt install git cmake build-essential libcurl4-openssl-dev

Next we can clone the repo from GitHub and open the directory.

git clone https://github.com/ggml-org/llama.cpp.git
cd llama.cpp

From there, building Llama.cpp is pretty simple. The devs have an entire page dedicated to building from source with instructions for everything from SYCL and CUDA to HIP and even Huawei’s CANN and Moore Threads’ MUSA runtimes.

Building Llama.cpp on the RPI5:

For the Raspberry Pi 5, we can use the standard build flags. Note we’ve added the -j 4 flag here to parallelize the process across the RPI’s four cores.

cmake -B build
cmake --build build --config Release -j 4

Building Llama.cpp for x86 and CUDA:

For the x86 box, we’ll need to make sure that the Nvidia drivers and CUDA tool kit are installed first by running:

sudo apt install nvidia-driver-570-server nvidia-cuda-toolkit
sudo reboot

After the system has rebooted, open the llama.cpp folder and build it with CUDA support by running:

cd llama.cpp
cmake -B build -DGGML_CUDA=ON
cmake --build build --config Release

Building for a different system or running into trouble? Check out Llama.cpp’s docs.

Completing the installation:

Regardless of which system you’re building on, it could take a couple of minutes to complete. Once the build has finished, the binaries will be saved in the build/bin/ folder inside the llama.cpp directory.

You can run these binaries directly from this directory, or complete the installation by copying them to your /usr/bin directory.

sudo cp build/bin/* /usr/bin/

If everything worked correctly, we should be able to spin up Google’s itty bitty new language model, Gemma 3 270M, by running:

llama-cli -hfr bartowski/google_gemma-3-270m-it-qat-GGUF:bf16

Would we recommend running LLMs on a Raspberry Pi? Not really, but at least now you know you can.

Performance tuning

So far we’ve covered how to download, install, run, serve, and quantize models in Llama.cpp, but we’ve only scratched the surface of what it’s capable of.

Run llama-cli --help and you’ll see just how many levers to pull and knobs to turn there really are. So, let’s take a look at some of the more useful flags at our disposal.

In this example, we’ve configured Llama.cpp to run OpenAI’s gpt-oss-20b model along with a few additional flags to maximize performance. Let’s break them down one by one.

./llama-server -hf ggml-org/gpt-oss-20b-GGUF --jinja -fa -c 16384 -np 2 --cache-reuse 256 -ngl 99

-fa: enables Flash Attention on supported platforms, which can dramatically speed up prompt processing times while also reducing memory requirements. We find that this is beneficial for most setups, but it’s worth trying with and without to be sure.

-c 16384: sets the model’s context window, or short-term memory, to 16,384 tokens. If left unset, Llama.cpp defaults to 4,096 tokens, which minimizes memory requirements but means the model will start forgetting details once you exceed that threshold. If you’ve got memory to spare, we recommend setting this as high as you can without running into out-of-memory errors, up to the model limits. For gpt-oss, that’s 131,072 tokens.

The larger the context window, the more RAM or VRAM is required to run the model. LMCache offers a calculator to help you determine how many tokens you can fit into your memory.

-np 2: allows Llama.cpp to process up to two requests simultaneously, which can be useful in multi-user setups or when connecting Llama.cpp to code assistant tools like Cline or Continue, which might make multiple simultaneous requests for code completion or chat functionality. Note that the context window is divided by the number of parallel processes. In this example, each parallel process has an 8,192-token context.

--cache-reuse 256: setting this helps to avoid recomputing key-value caches, speeding up prompt processing, particularly for extended multi-turn conversations. We recommend starting with 256-token chunks.
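Because the context window set with -c is shared between the slots created by -np, it’s worth doing the division before you pick your values. Here’s a small sketch of that arithmetic. The function names are ours, and the numbers come from the example command above.

```python
def context_per_slot(total_context: int, parallel_slots: int) -> int:
    """Tokens available to each request when the window is split evenly."""
    if parallel_slots < 1:
        raise ValueError("parallel_slots must be at least 1")
    return total_context // parallel_slots


def total_context_needed(per_slot_context: int, parallel_slots: int) -> int:
    """Value to pass to -c so every slot gets per_slot_context tokens."""
    return per_slot_context * parallel_slots


print(context_per_slot(16384, 2))      # the -c 16384 -np 2 example
print(total_context_needed(16384, 2))  # -c needed for two full 16,384-token slots
```

In other words, if you want two users to each get the full 16,384 tokens, you’d need to double the -c value, along with the memory to back it.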

Hyperparameter tuning

For optimal performance and output quality, many model builders recommend setting sampling parameters, like temperature or min-p, to specific values.

For example, Alibaba’s Qwen team recommends setting temp to 0.7, top-p to 0.8, top-k to 20, and min-p to 0 when running many of its instruct models, like Qwen3-30B-A3B-Instruct-2507. Recommended hyperparameters can usually be found on the model card on repos like Hugging Face.

In a nutshell, these parameters influence which tokens the model selects from the probability curve. Temperature is one of the easiest to understand, as setting it lower usually results in less creative and more deterministic outputs while setting it higher will result in more adventurous results.
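To make that a little more concrete, here’s a toy Python illustration of how temperature, top-k, top-p, and min-p reshape a probability distribution. This is a simplified sketch of the general techniques, not Llama.cpp’s actual sampler code, and the four-token "vocabulary" of logits is made up for the example.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Turn logits into probabilities; lower temperature sharpens the curve."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def _renormalize(probs: List[float]) -> List[float]:
    total = sum(probs)
    return [p / total for p in probs]


def top_k_filter(probs: List[float], k: int) -> List[float]:
    """Keep only the k most likely tokens."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep = set(ranked[:k])
    return _renormalize([p if i in keep else 0.0 for i, p in enumerate(probs)])


def top_p_filter(probs: List[float], top_p: float) -> List[float]:
    """Keep the smallest set of top tokens whose probabilities sum to top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = set(), 0.0
    for i in ranked:
        keep.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return _renormalize([p if i in keep else 0.0 for i, p in enumerate(probs)])


def min_p_filter(probs: List[float], min_p: float) -> List[float]:
    """Drop tokens less likely than min_p times the most likely token."""
    threshold = min_p * max(probs)
    return _renormalize([p if p >= threshold else 0.0 for p in probs])


example_logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for four tokens
for temp in (0.2, 0.7, 1.5):
    rounded = [round(p, 3) for p in softmax(example_logits, temp)]
    print(f"temp={temp}: {rounded}")
```

Running it shows the most likely token soaking up nearly all of the probability at a low temperature, while higher temperatures spread it more evenly across the alternatives.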

Many applications, like Open WebUI, LibreChat, and Jan, allow overriding them via the API, which they (or you) can access at localhost:8080 if you are running llama-server. However, for applications that don’t, it can be helpful to set these when spinning up the model in Llama.cpp.

For example, for Qwen 3 instruct models, we’d run something like the following. (Note this particular model requires a little over 20GB of memory, so if you want to test it, you may need to swap out Qwen for a smaller model.)

./llama-server -hfr bartowski/Qwen_Qwen3-30B-A3B-Instruct-2507-GGUF:Q4_K_M --temp 0.7 --top-p 0.8 --top-k 20 --min-p 0.0

You can find a full list of available sampling parameters by running:

./llama-cli --help

For more information on how sampling parameters impact output generation, check out Amazon Web Services’ explainer here.

Boosting performance with speculative decoding

One of the features in Llama.cpp that you won’t see in other model runners, like Ollama, is support for speculative decoding. This process can speed up token generation in highly repetitive workloads, like code generation, by using a small draft model to predict the outputs of a larger, more accurate one.

This approach does require a compatible draft model, usually one from the same family as your primary one. In this example we’re using speculative decoding to speed up Alibaba’s Qwen3-14B model using the 0.6B variant as our drafter.

./llama-server -hfr Qwen/Qwen3-14B-GGUF:Q4_K_M -hfrd Qwen/Qwen3-0.6B-GGUF:Q8_0 -c 4096 -cd 4096 -ngl 99 -ngld 99 --draft-max 32 --draft-min 2 --cache-reuse 256 -fa

To test, we can ask the model to generate a block of text or code. In our testing with speculative decoding enabled, generation rates were roughly on par with running Qwen3-14B on its own. However, when we requested a minor change to that text or code, performance roughly doubled, jumping from around 60 tok/s to 117 tok/s.
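If the draft-and-verify idea feels abstract, the toy sketch below shows the greedy version of it in Python. The two "models" are stand-in functions we invented that predict the next number in a sequence, and the verification loop calls the target one position at a time, whereas a real engine checks the whole draft in a single batched pass, which is where the speedup comes from. Nothing here reflects Llama.cpp’s internals.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]


def toy_target(tokens: List[int]) -> int:
    """Stand-in for the large model: counts upward, wrapping at 10."""
    return (tokens[-1] + 1) % 10


def toy_draft(tokens: List[int]) -> int:
    """Stand-in for the small model: usually agrees, but slips after a 4."""
    return 0 if tokens[-1] % 5 == 4 else (tokens[-1] + 1) % 10


def greedy_decode(target: NextToken, prompt: List[int], n_new: int) -> List[int]:
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(target(tokens))
    return tokens


def speculative_decode(
    target: NextToken, draft: NextToken, prompt: List[int], n_new: int, draft_max: int = 4
) -> Tuple[List[int], int, int]:
    """Return (tokens, accepted_draft_tokens, proposed_draft_tokens)."""
    tokens = list(prompt)
    goal = len(prompt) + n_new
    accepted = proposed = 0
    while len(tokens) < goal:
        # 1. The cheap draft model guesses a short run of tokens.
        proposal: List[int] = []
        for _ in range(min(draft_max, goal - len(tokens))):
            proposal.append(draft(tokens + proposal))
        proposed += len(proposal)
        # 2. The target checks each guess and keeps the matching prefix.
        for guess in proposal:
            expected = target(tokens)
            if guess == expected:
                tokens.append(guess)
                accepted += 1
            else:
                tokens.append(expected)  # use the target's token and re-draft
                break
    return tokens, accepted, proposed


result, n_accepted, n_proposed = speculative_decode(toy_target, toy_draft, [0], 20)
print(result == greedy_decode(toy_target, [0], 20), n_accepted, n_proposed)
```

The key property is that the output is identical to what the big model would have produced on its own. The draft model only affects how many tokens get accepted per verification step, which is why predictable workloads like small code edits benefit most.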

If you’d like to know more about how speculative decoding works in Llama.cpp, you can find our deep dive here.

Splitting big models between CPUs and GPUs

One of Llama.cpp’s most valuable features is its ability to split large models between CPU and GPUs. So long as you have enough memory between your DRAM and VRAM to fit the model weights (and the OS), there’s a good chance you’ll be able to run it.

As we alluded to earlier, the easiest way to do this is to slowly increase the number of layers offloaded to the GPU (-ngl) until you get an out-of-memory error and then back off a bit.

For example, if you had a GPU with 20GB of VRAM and 32GB of DDR5 and wanted to run Meta’s Llama 3.1 70B model at 4-bit precision, which requires a little over 42GB of memory, you might offload 40 of the layers to the GPU and run the rest on the CPU.

./llama-server -hfr bartowski/Meta-Llama-3.1-70B-Instruct-GGUF -ngl 40

While the model runs, performance won’t be great — in our testing we got about 2 tok/s.

However, thanks to the relatively small number of active parameters in mixture-of-experts (MoE) models such as gpt-oss, it’s actually possible to get decent performance, even when running far larger models.

By taking advantage of Llama.cpp’s MoE expert offload features, we were able to get OpenAI’s 120 billion parameter gpt-oss model running at a rather-respectable 20 tok/s on a system with a 20GB GPU and 64GB of DDR4 3200 MT/s memory.

./llama-server -hf ggml-org/gpt-oss-120b-GGUF -fa -c 32768 --jinja -ngl 999 --n-cpu-moe 26

In this case, we set -ngl to 999 and used the --n-cpu-moe parameter to offload progressively more expert layers to the CPU until Llama.cpp stopped throwing out-of-memory errors.
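That trial-and-error process is easy to describe as a loop. In the sketch below, fits is a hypothetical stand-in for "launch llama-server with this --n-cpu-moe value and see whether the model loads", which you’d have to implement yourself, and the layer count of 36 for gpt-oss-120b is our assumption. The lambda at the bottom simply pretends that 26 is the first value that works, mirroring the result above.

```python
from typing import Callable, Optional


def smallest_cpu_moe(fits: Callable[[int], bool], n_layers: int) -> Optional[int]:
    """Return the smallest --n-cpu-moe value for which fits() succeeds.

    Fewer expert layers on the CPU generally means faster generation, so we
    start at zero and move experts off the GPU only as far as needed.
    """
    for n_cpu_moe in range(n_layers + 1):
        if fits(n_cpu_moe):
            return n_cpu_moe
    return None  # even with every expert layer on the CPU, it didn't fit


N_LAYERS = 36  # assumption: layer count for gpt-oss-120b

# Pretend predicate standing in for a real launch-and-check step.
print(smallest_cpu_moe(lambda n: n >= 26, N_LAYERS))
```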

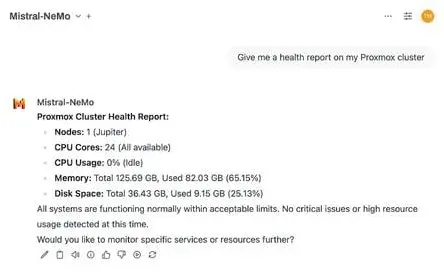

Tool calling

If your workload requires it, Llama.cpp can also parse tool calls for clients that talk to its OpenAI-compatible API endpoints, like Open WebUI or Cline. You’ll need tools if you want to bring in an outside feature, such as a clock or a calculator, or to check the status of a Proxmox cluster.

Enabling tool calling varies from model to model. For most popular models, including gpt-oss, nothing special is required. Simply append the --jinja flag and you’re off to the races.

./llama-server -hf ggml-org/gpt-oss-20b-GGUF --jinja

Other models, like DeepSeek R1, may require setting a chat template manually when launching the model. For example:

./llama-server --jinja -fa -hf bartowski/DeepSeek-R1-Distill-Qwen-32B-GGUF:Q4_K_M \
  --chat-template-file models/templates/llama-cpp-deepseek-r1.jinja
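On the client side, a tool is just a JSON description sent along with the chat request, plus some code that runs when the model asks for it. Here’s a minimal Python sketch using the clock example, following the OpenAI-style tools format. The get_current_time function, the handler table, and the helper names are all ours, and the sample tool call at the bottom is hand-written to show the shape of the data rather than captured from a real model.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict


def get_current_time() -> str:
    """The 'outside feature' we expose to the model: a clock."""
    return datetime.now(timezone.utc).isoformat()


TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_current_time",
            "description": "Return the current UTC time as an ISO 8601 string.",
            "parameters": {"type": "object", "properties": {}, "required": []},
        },
    }
]

HANDLERS = {"get_current_time": get_current_time}


def build_payload(prompt: str) -> Dict[str, Any]:
    """Request body for a chat completion that advertises our tools."""
    return {"messages": [{"role": "user", "content": prompt}], "tools": TOOLS}


def run_tool_call(tool_call: Dict[str, Any]) -> Dict[str, Any]:
    """Execute one tool call and build the message to send back to the model."""
    function = tool_call["function"]
    arguments = json.loads(function.get("arguments") or "{}")
    result = HANDLERS[function["name"]](**arguments)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": str(result)}


# Hand-written example of what a tool call in the model's reply looks like.
sample_call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "get_current_time", "arguments": "{}"},
}
print(json.dumps(build_payload("What time is it?"), indent=2))
print(run_tool_call(sample_call))
```

In a real client, you’d append the returned tool message to the conversation and send it back to the server so the model can fold the result into its answer.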

Tool calling is a whole can of worms unto itself, so if you’re interested in learning more, check out our function calling and Model Context Protocol deep dives here and here.

Summing up

While Llama.cpp may be one of the most comprehensive model runners out there (we’ve only discussed a fraction of all the app entails), we understand it can be quite daunting for those dipping their toes into local LLMs for the first time. This is one of the reasons why it’s taken us so long to do a hands-on of the app, and why we think that simpler apps like Ollama and LM Studio are still valuable.

So now that you’ve gotten your head wrapped around Llama.cpp you might be wondering how LLMs are actually deployed in production, or maybe how to get started with image generation. We’ve got guides for both. ®
