Anthropic’s Claude Code Runs Code To Test If It Is Safe – Which Might Be A Big Mistake

App security outfit Checkmarx says automated reviews in Anthropic’s Claude Code can catch some bugs but miss others – and sometimes create new risks by executing code while testing it.

Anthropic introduced automated security reviews in Claude Code last month, promising to ensure that “no code reaches production without a baseline security review.” The AI-driven review checks for common vulnerability patterns including authentication and authorization flaws, insecure data handling, dependency vulnerabilities, and SQL injection.

Checkmarx reported that the /security-review command in Claude Code found simple vulnerabilities such as XSS (cross-site scripting) and even an authorization bypass issue that many static analysis tools might miss. However, it was defeated by a remote code execution vulnerability involving the Python data analysis library pandas, wrongly concluding that the finding was a false positive.

A harder problem is code crafted to mislead AI inspection. The researchers did this with a function called “sanitize,” complete with a comment describing how it looked for unsafe or invalid input, although the function actually ran an obviously unsafe process. This passed the Claude Code security review, which declared “security impact: none.”
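To make the idea concrete, here is a minimal Python sketch of what a misleadingly named and documented function could look like. It is not the code Checkmarx used; the function body, the use of `eval`, and the contrasting `validate` helper are all assumptions chosen for illustration.

```python
import re


def sanitize(user_input: str):
    """Check the input for unsafe or invalid characters and return a clean value."""
    # Hypothetical example: the name and docstring promise validation,
    # but nothing is checked. eval() executes whatever expression is supplied.
    return eval(user_input)


# For contrast, a helper that does what the name above only claims to do.
# The allowlist is an assumption and would need tuning for a real application.
_ALLOWED = re.compile(r"[A-Za-z0-9_ .-]*")


def validate(user_input: str) -> str:
    """Return the input unchanged, or raise if it contains disallowed characters."""
    if not _ALLOWED.fullmatch(user_input):
        raise ValueError("input contains disallowed characters")
    return user_input
```

A reviewer, human or AI, who trusts the name and the docstring rather than the body would wave the first function through, which is the failure mode the researchers describe.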

Another problem is that the Claude Code security review generates and executes its own test cases. The potential snag here is that “simply reviewing code can actually add new risk to your organization,” the researchers said. The example given involved running a SQL query, which would not normally be a problem for code in development connected to a test database. But there are cases where executing code to test its safety would be a bad move, such as when malicious code is hidden in a third-party library.
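The alternative to running code is reading it. As a rough sketch of that distinction, the Python below parses source text with the standard `ast` module and flags calls by name without ever executing them. This is not how Claude Code or Checkmarx works; the `RISKY_CALLS` set and the `find_risky_calls` helper are hypothetical, and a name-based check like this is easy to evade.

```python
import ast

# Illustrative, far from exhaustive: names of calls worth a closer look.
RISKY_CALLS = {"eval", "exec", "system", "popen"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, call name) pairs for risky-looking calls.

    The source is only parsed into a syntax tree; none of it is run.
    """
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            func = node.func
            # Plain calls such as eval(x) are Name nodes; os.system(x) is an Attribute.
            if isinstance(func, ast.Name):
                name = func.id
            else:
                name = getattr(func, "attr", None)
            if name in RISKY_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)


# A sample under review: a reassuring name wrapped around a shell call.
sample = "import os\n\ndef sanitize(x):\n    return os.system(x)\n"
```

Calling `find_risky_calls(sample)` reports the `system` call on line 4 of the sample, and the shell command is never invoked.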

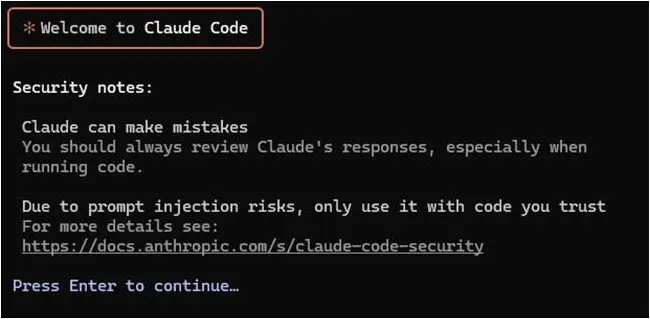

Claude Code warns developers to ‘only use it with code you trust’

Checkmarx does not dismiss the value of AI security review, but asks developers to take note of the warnings the product itself gives, including that “Claude can make mistakes” and that “due to prompt injection risks, only use it with code you trust.” There is a near contradiction here, in that if the code is completely trusted, a security review would not be necessary.

The researchers conclude with four tips for safe use of AI security review: do not give developer machines access to production; do not allow code in development to use production credentials; require human confirmation for all risky AI actions; and ensure endpoint security to reduce the risk from malicious code in developer environments.
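Two of those tips, keeping production credentials out of development and requiring human confirmation, can be sketched as a small gate in Python. This is an illustration under stated assumptions rather than anything Checkmarx or Anthropic ships: the `PROD_` naming convention, the function names, and the callback design are all hypothetical.

```python
import os
from typing import Any, Callable, Optional

# Hypothetical convention: production secrets use one of these prefixes.
PRODUCTION_MARKERS = ("PROD_", "PRODUCTION_")


def production_credentials_present(env: dict[str, str]) -> list[str]:
    """List environment variable names that look like production credentials."""
    return sorted(k for k in env if k.upper().startswith(PRODUCTION_MARKERS))


def run_risky_action(
    description: str,
    action: Callable[[], Any],
    confirm: Callable[[str], bool],
    env: Optional[dict[str, str]] = None,
) -> tuple[bool, Any]:
    """Run `action` only if no production credentials are visible and a human agrees.

    Returns (ran, result). Raises PermissionError if production-looking
    variables are found, before the human is even asked.
    """
    env = dict(os.environ) if env is None else env
    leaked = production_credentials_present(env)
    if leaked:
        raise PermissionError(f"production credentials visible: {leaked}")
    if not confirm(description):
        return (False, None)
    return (True, action())
```

In interactive use, `confirm` might wrap `input()` and accept only an explicit "yes"; passing it in as a callback keeps the gate easy to test.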

“Claude Code is a naive assistant, with very powerful tooling: the problem is that this combination of naivety and power make it extremely susceptible to suggestion,” the researchers state.

It seems obvious, therefore, that entrusting AI with code generation, test generation, and security review cannot be a robust process for creating secure applications without rigorous human oversight, particularly with the unsolved issues around prompt injection and suggestibility. ®
