How to run OpenAI’s new gpt-oss-20b LLM on your computer

Earlier this week, OpenAI released two open-weight models, both named gpt-oss. Because you can download them, you can run them locally.

The lighter model, gpt-oss-20b, has 21 billion parameters and requires about 16GB of free memory. The heavier model, gpt-oss-120b, has 117 billion parameters and needs 80GB of memory to run. By way of comparison, a frontier model like DeepSeek R1 has 671 billion parameters and needs about 875GB to run, which is why LLM developers and their partners are building massive datacenters as fast as they can.
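As a rough check on those figures, the memory needed just to hold a model's weights is its parameter count multiplied by the bytes stored per parameter. The Python sketch below applies that arithmetic. The bits-per-parameter values are assumptions for illustration and are not from this article. The sketch treats gpt-oss as stored entirely in a 4-bit block format with a shared 8-bit scale per 32 values, roughly 4.25 bits per parameter, although real checkpoints mix precisions. It also assumes DeepSeek R1 is stored at 8 bits. The runtime needs extra memory for the context cache and other overhead, which likely accounts for the higher figures quoted above.

```python
# Back-of-the-envelope estimate of the memory needed to hold an LLM's weights.
# ASSUMPTION: the bits-per-parameter values below are illustrative. Real
# checkpoints mix precisions, and the runtime needs extra memory for the
# context (KV) cache and other overhead on top of the weights.

def weight_memory_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate size of the weights in GB (10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Parameter counts come from the article; the precisions are assumptions
    # (4.25 bits = 4-bit values plus an 8-bit scale shared by each 32 values).
    models = {
        "gpt-oss-20b (assumed ~4.25 bits/param)": (21, 4.25),
        "gpt-oss-120b (assumed ~4.25 bits/param)": (117, 4.25),
        "DeepSeek R1 (assumed 8 bits/param)": (671, 8),
    }
    for name, (params, bits) in models.items():
        print(f"{name}: ~{weight_memory_gb(params, bits):.1f} GB of weights")
```

Under these assumptions it prints roughly 11GB, 62GB, and 671GB of weights. Each figure falls below the corresponding memory requirement quoted above.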

Unless you’re running a high-end AI server, you probably can’t deploy gpt-oss-120b on your home system, but many people have the memory needed to run gpt-oss-20b. Your computer needs either a GPU with at least 16GB of dedicated VRAM, or 24GB or more of system memory (leaving at least 8GB for the OS and other software). Performance depends heavily on memory bandwidth, so a graphics card with GDDR7 or GDDR6X memory (1,000+ GB/s) will far outperform the DDR4 or DDR5 memory in a typical notebook or desktop (20-100 GB/s).
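Bandwidth dominates because generating each output token requires reading the model's active weights from memory. Dividing bandwidth by the bytes read per token therefore gives a ceiling on generation speed. The following is a minimal Python sketch of that estimate. It assumes gpt-oss-20b, a mixture-of-experts model, activates roughly 3.6 billion parameters per token, a figure from OpenAI's published specs rather than this article. It also assumes each parameter takes about half a byte. It ignores compute limits, cache reads, and software overhead, so treat the results as upper bounds rather than predictions.

```python
# Ceiling on decode speed for a memory-bandwidth-bound model:
#   tokens/sec <= bandwidth / bytes read per generated token
# ASSUMPTIONS (not from the article): gpt-oss-20b activates roughly 3.6 billion
# parameters per token, stored at about half a byte each. Compute limits,
# KV-cache reads, and software overhead are ignored, so real throughput will
# be lower than these numbers.

def max_tokens_per_second(bandwidth_gb_s: float,
                          active_params_billions: float = 3.6,
                          bytes_per_param: float = 0.5) -> float:
    bytes_per_token = active_params_billions * 1e9 * bytes_per_param
    return bandwidth_gb_s * 1e9 / bytes_per_token


if __name__ == "__main__":
    # Bandwidth figures are the ranges quoted in the article.
    for label, bandwidth in [("Typical DDR4/DDR5, low end", 20),
                             ("Typical DDR4/DDR5, high end", 100),
                             ("GDDR6X/GDDR7 graphics card", 1000)]:
        ceiling = max_tokens_per_second(bandwidth)
        print(f"{label} ({bandwidth} GB/s): at most ~{ceiling:.0f} tokens/s")
```

Under those assumptions, 100 GB/s caps output at roughly 55 tokens per second. 1,000 GB/s allows about ten times that.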

Below, we’ll explain how to use the new language model for free on Windows, Linux, and macOS. We’ll be using Ollama, a free client app that makes downloading and running this LLM very easy.

How to run gpt-oss-20b on Windows

It’s easy to run the new LLM on Windows. First, download and install Ollama for Windows.

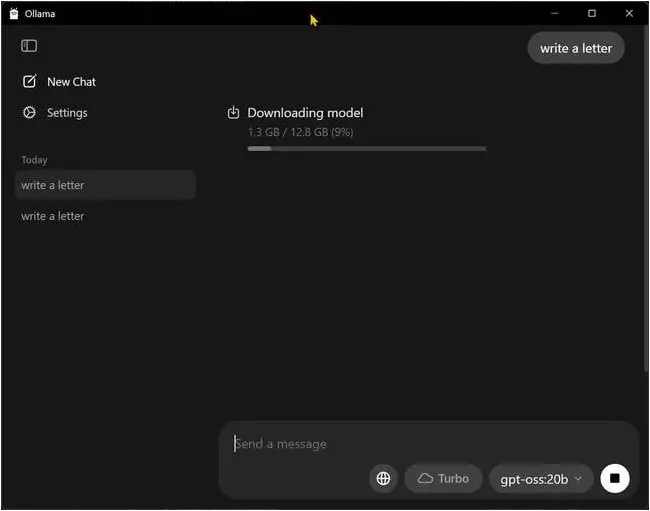

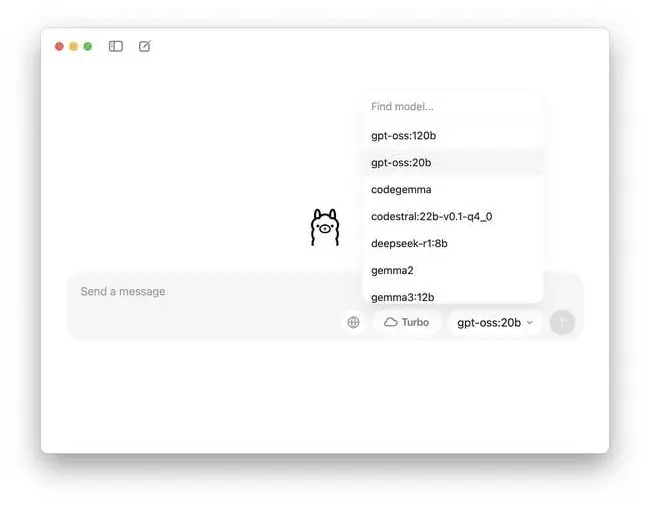

After you open Ollama, you’ll see a field marked “Send a message” and, at bottom right, a drop-down list of available models that uses gpt-oss:20b as its default. You can choose a different model, but let’s stick with this one.

Enter any prompt. I started with “Write a letter” and Ollama began downloading 12.4GB worth of model data. The download is not fast.

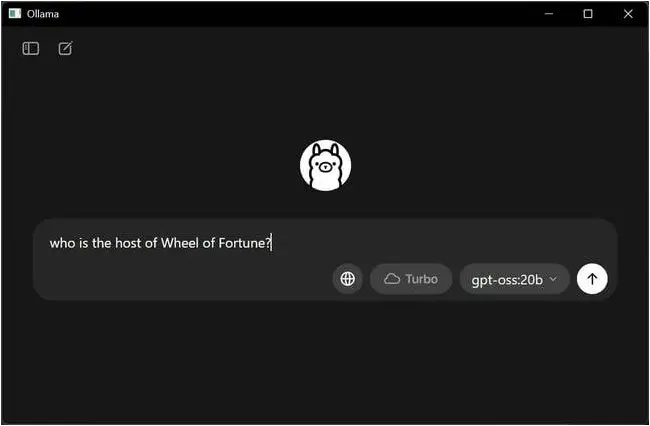

Once the download completes, you can prompt gpt-oss-20b as you wish and just click the arrow button to submit your request.

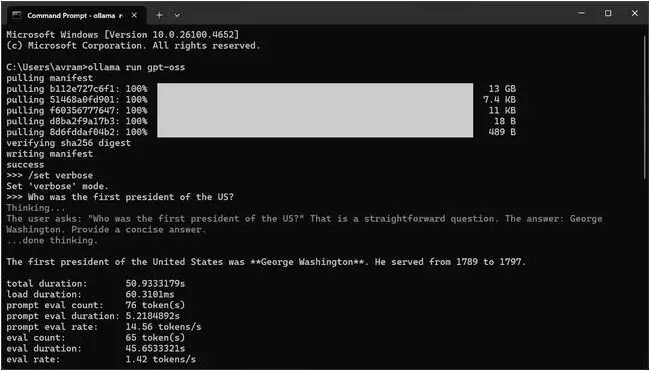

You can also run Ollama from the command prompt if you don’t mind going without a GUI. I recommend doing so because the CLI offers a “verbose mode” that delivers performance statistics such as the time taken to complete a query.

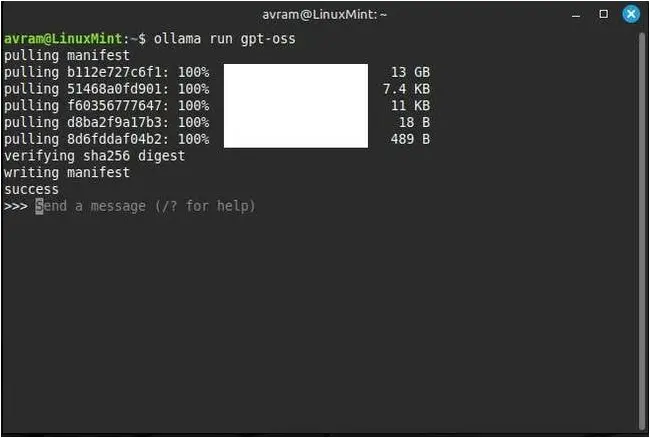

To run Ollama from the command prompt, first enter:

ollama run gpt-oss:20b

(If this is the first time you’ve run this, it will need to download the model from the internet.) Then, at the prompt, enter:

/set verbose

Finally, enter your prompt.
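A short Python script can drive the same CLI if you want to repeat timings like the ones later in this article. This sketch assumes `ollama` is on your PATH. It also assumes your version accepts a prompt as a command-line argument and a `--verbose` flag; check `ollama run --help`. It passes the article's two test prompts one at a time and reports wall-clock time. On the first run that time also includes loading, and if necessary downloading, the model.

```python
import subprocess
import time

# The two prompts used in the article's performance tests.
PROMPTS = [
    "Write a fan letter to Taylor Swift, telling her how much I love her songs",
    "Who was the first president of the US?",
]


def build_command(prompt: str, model: str = "gpt-oss:20b", verbose: bool = True) -> list:
    """Build a one-shot `ollama run` command that passes the prompt as an argument."""
    cmd = ["ollama", "run", model]
    if verbose:
        cmd.append("--verbose")  # ask Ollama to print timing statistics after the reply
    cmd.append(prompt)
    return cmd


def timed_run(prompt: str, model: str = "gpt-oss:20b") -> float:
    """Run one prompt to completion and return the wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(build_command(prompt, model), check=True)
    return time.perf_counter() - start


def main() -> None:
    try:
        for prompt in PROMPTS:
            print(f"--- {prompt!r} took {timed_run(prompt):.1f} s")
    except FileNotFoundError:
        print("The `ollama` executable was not found on PATH.")


if __name__ == "__main__":
    main()
```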

How to run gpt-oss-20b on Linux

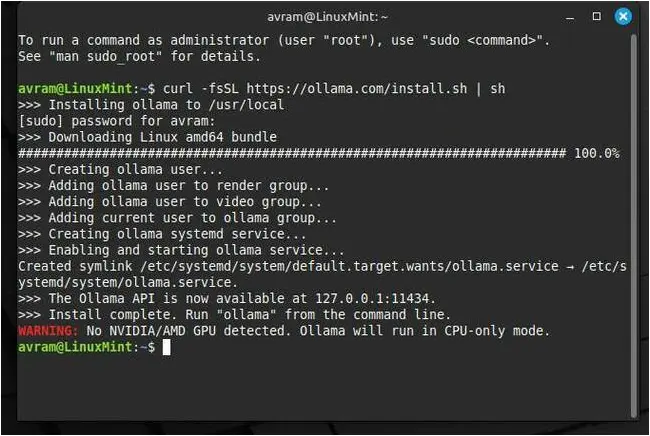

If you’re not already in a Linux terminal, start by launching one. Then, at the command prompt, enter the following:

curl -fsSL https://ollama.com/install.sh | sh

You’ll then wait as the software downloads and installs.

Then enter the following command to start Ollama with gpt-oss:20b as its model.

ollama run gpt-oss:20b

Your system will have to download about 13GB of data before you can enter your first prompt.

I recommend turning on verbose mode by entering:

/set verbose

Then enter your prompt.
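Ollama also runs a local HTTP server, on port 11434 by default, which is handy for scripting. The sketch below uses only Python's standard library. It first asks `/api/tags` to confirm the model finished downloading, then sends one prompt to `/api/generate`. It computes output tokens per second from the `eval_count` and `eval_duration` fields, where durations are in nanoseconds. The endpoint and field names follow Ollama's API documentation as we understand it, so verify them against your installed version. If you pulled the model as plain `gpt-oss`, it may be listed as `gpt-oss:latest` instead of `gpt-oss:20b`.

```python
import json
import urllib.error
import urllib.request
from typing import Optional

# ASSUMPTION: the Ollama server is running locally on its default port.
BASE_URL = "http://localhost:11434"


def request_json(path: str, payload: Optional[dict] = None) -> dict:
    """GET (no payload) or POST (with a JSON payload) to the local Ollama server."""
    data = json.dumps(payload).encode() if payload is not None else None
    req = urllib.request.Request(BASE_URL + path, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def has_model(tags: dict, name: str) -> bool:
    """True if an /api/tags response lists a model with exactly this name."""
    return any(m.get("name") == name for m in tags.get("models", []))


def tokens_per_second(stats: dict) -> float:
    """Output tokens per second; Ollama reports durations in nanoseconds."""
    return stats["eval_count"] / (stats["eval_duration"] / 1e9)


def main(model: str = "gpt-oss:20b") -> None:
    try:
        if not has_model(request_json("/api/tags"), model):
            print(f"{model} is not downloaded yet; run `ollama run {model}` first.")
            return
        result = request_json("/api/generate", {
            "model": model,
            "prompt": "Who was the first president of the US?",
            "stream": False,  # return one JSON object instead of a stream
        })
    except urllib.error.URLError:
        print("Could not reach the Ollama server at", BASE_URL)
        return
    print(result.get("response", ""))
    print(f"Total: {result['total_duration'] / 1e9:.1f} s, "
          f"{tokens_per_second(result):.1f} tokens/s")


if __name__ == "__main__":
    main()
```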

How to run gpt-oss-20b on Mac

If you’re on an Apple silicon Mac (M1 or later), running gpt-oss-20b is as simple as it is on Windows. Start by downloading and running the macOS version of the Ollama installer.

Launch Ollama and make sure that gpt-oss:20b is the selected model.

Now enter your prompt, click the up arrow button, and you’re good to go.

Performance of gpt-oss-20b: what to expect

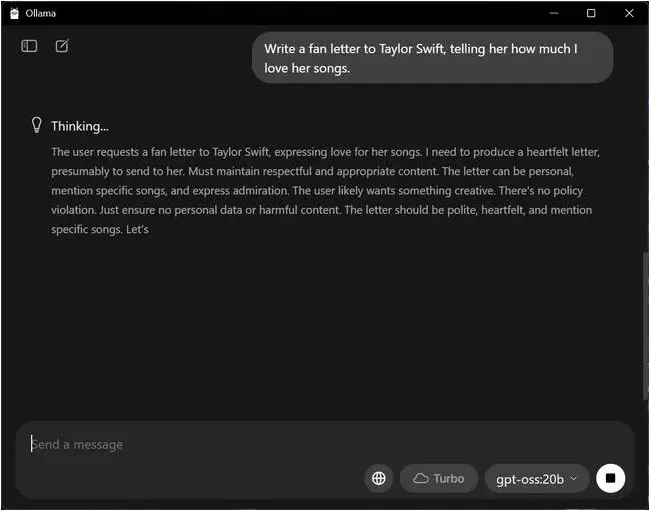

To see just how well gpt-oss-20b performs on a local computer, we tested two different prompts on three different devices. We first asked gpt-oss-20b to “Write a fan letter to Taylor Swift, telling her how much I love her songs” and then followed up with the much simpler “Who was the first president of the US?”

We used the following hardware to test those prompts:

- Lenovo ThinkPad X1 Carbon laptop with Core Ultra 7 165U CPU and 64GB of LPDDR5x-6400 RAM

- Apple MacBook Pro with M1 Max chip and 32GB of LPDDR5-6400 RAM

- Homebuilt PC with discrete Nvidia RTX 6000 Ada GPU, AMD Ryzen 9 5900X CPU, and 128GB of DDR4-3200 RAM
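A memory speed rating alone does not settle bandwidth. Peak bandwidth is the transfer rate multiplied by the width of the memory bus. The bus widths in this Python sketch are assumptions drawn from public spec sheets, not from the article. They are 128-bit for the ThinkPad's LPDDR5x, 512-bit for the M1 Max, and dual-channel 128-bit DDR4 for the desktop. The RTX 6000 Ada is assumed to use 384-bit GDDR6 at 20 Gbps per pin.

```python
# Peak theoretical memory bandwidth = transfers per second x bytes per transfer.
# ASSUMPTIONS (public spec sheets, not the article): bus widths of 128 bits for
# the ThinkPad's LPDDR5x, 512 bits for the M1 Max, 128 bits (dual channel) for
# the desktop's DDR4, and 384 bits of 20 Gbps-per-pin GDDR6 on the RTX 6000 Ada.

def peak_bandwidth_gb_s(mega_transfers_per_s: float, bus_width_bits: int) -> float:
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


SYSTEMS = {
    "ThinkPad X1 Carbon, LPDDR5x-6400": (6400, 128),
    "MacBook Pro M1 Max, LPDDR5-6400": (6400, 512),
    "Desktop system RAM, DDR4-3200": (3200, 128),
    "RTX 6000 Ada, GDDR6 at 20 Gbps": (20000, 384),
}

if __name__ == "__main__":
    for name, (rate, width) in SYSTEMS.items():
        print(f"{name}: ~{peak_bandwidth_gb_s(rate, width):.0f} GB/s")
```

That works out to about 102GB/s for the ThinkPad, 410GB/s for the M1 Max, 51GB/s for the desktop's system RAM, and 960GB/s for the RTX 6000 Ada. Those figures help explain why two machines with 6400 MT/s memory can perform very differently.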

On the ThinkPad X1 Carbon, performance was very poor, in large part because Ollama doesn’t take advantage of its integrated graphics or neural processing unit (NPU). It took a full 10 minutes and 13 seconds to output a 600-word letter to Taylor Swift. As with all prompts for gpt-oss-20b, the system spent the first minute or two showing its reasoning in a process it calls “thinking,” and only then showed the output. Getting the simple, two-sentence answer to “Who was the first president of the US?” took 51 seconds.

But at least our letter to Taylor was full of heartfelt emo lines like this: “It’s not just the songs, Taylor; it’s your authenticity. You’ve turned your scars into verses and your triumphs into choruses.”

Although its memory runs at the same 6400 MT/s, the MacBook far outperformed the ThinkPad, completing the fan letter in 26 seconds and answering the presidential question in just three seconds. The M1 Max’s much wider memory bus, which Apple rates at 400GB/s, and Ollama’s support for Apple’s integrated GPU explain the gap. As we might expect, the RTX 6000-powered desktop delivered our letter in just six seconds and our George Washington answer in less than half a second.
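Expressing the reported times as speedups over the ThinkPad makes the gaps concrete. This is only rough arithmetic. The runs likely produced letters of different lengths. The desktop’s “less than half a second” is treated as 0.5 seconds, so its figure for that prompt is a lower bound.

```python
# Wall-clock times in seconds, as reported in the article's tests.
LETTER = {"ThinkPad X1 Carbon": 10 * 60 + 13,
          "MacBook Pro (M1 Max)": 26,
          "RTX 6000 Ada desktop": 6}
# The desktop answered in "less than half a second"; 0.5 s is used as an upper
# bound on its time, so its speedup on this prompt is a lower bound.
PRESIDENT = {"ThinkPad X1 Carbon": 51,
             "MacBook Pro (M1 Max)": 3,
             "RTX 6000 Ada desktop": 0.5}


def speedups(times: dict, baseline: str) -> dict:
    """How many times faster each system finished than the baseline system."""
    base = times[baseline]
    return {name: base / t for name, t in times.items()}


if __name__ == "__main__":
    for label, times in [("Fan letter", LETTER), ("First president", PRESIDENT)]:
        for name, factor in speedups(times, "ThinkPad X1 Carbon").items():
            print(f"{label:16} {name:22} {factor:6.1f}x")
```

By this measure the MacBook finished the letter about 24 times faster than the ThinkPad and the history question 17 times faster. The desktop was roughly 100 times faster on both.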

Overall, if you’re running this on a system with a powerful GPU or on a recent Mac, you can expect good performance. If you’re using an Intel- or AMD-powered laptop with integrated graphics that Ollama doesn’t support, processing will be offloaded to the CPU, and you may want to go for lunch after entering your prompt. Or you might try your luck with LM Studio, another popular application for running LLMs locally on your PC.
