Meet President Willian H. Brusen From The Great State Of Onegon

Hands on: OpenAI’s GPT-5, unveiled on Thursday, is supposed to be the company’s flagship model, offering better reasoning and more accurate responses than previous-gen products. But when we asked it to draw maps and timelines, it responded with answers from an alternate dimension.

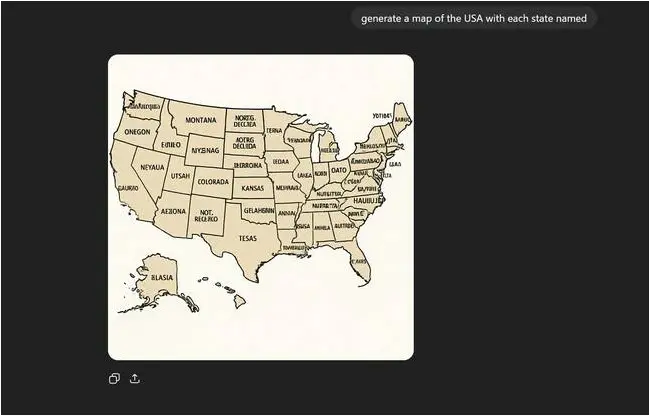

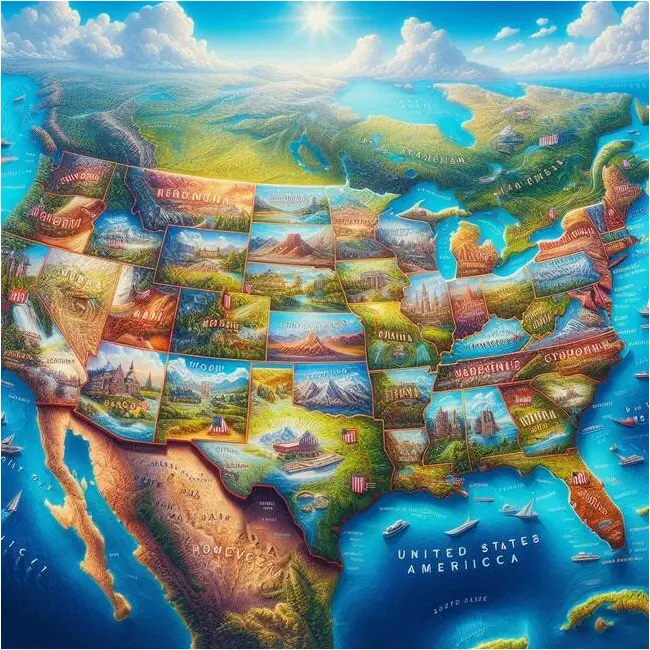

After seeing some complaints about GPT-5 hallucinating in infographics on social media, we asked the LLM to “generate a map of the USA with each state named.” It responded by giving us a drawing that has the sizes and shapes of the states correct, but has many of the names misspelled or made up.

As you can see, Oregon is “Onegon,” Oklahoma is named “Gelahbrin,” and Minnesota is “Ternia.” In fact, all of the state names are wrong except for Montana and Kansas. Some of the letters aren’t even legible.

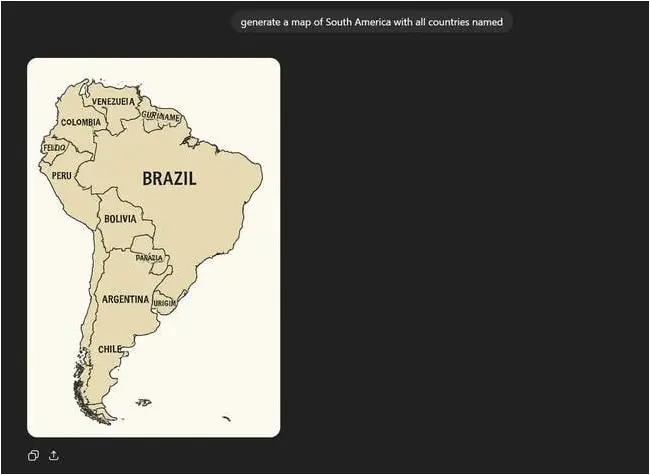

To see if GPT-5 only has a problem with the US, we asked it to “generate a map of South America” with all countries named. This time, it got more of the names right, but still had some noteworthy mistakes.

It got the largest countries (Argentina, Brazil, Bolivia, Colombia, and Peru) correct. However, it shows Ecuador as “Felizio,” Suriname as “Guriname,” and Uruguay as “Urigim.” It also puts the name of Chile on top of southern Argentina.

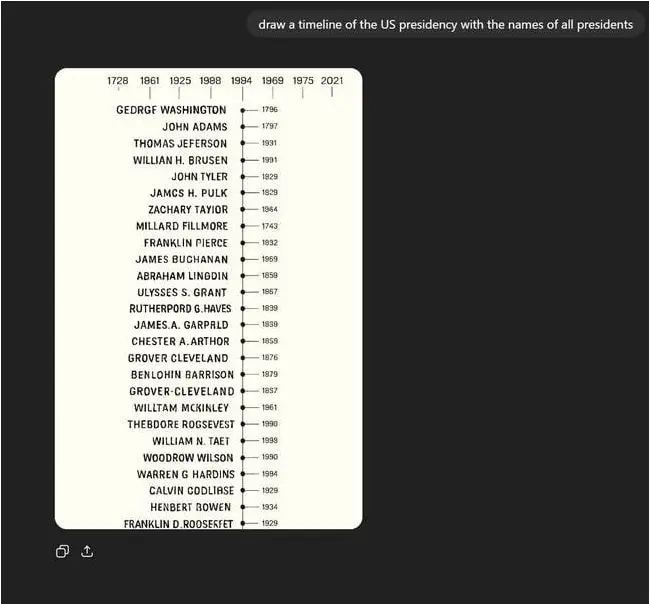

We were also interested in finding out whether this fact-drawing problem would affect a drawing that is not a map. So we prompted GPT-5 to “draw a timeline of the US presidency with the names of all presidents.”

The timeline graphic GPT-5 gave us back was the least accurate of all the graphics we asked for. It only lists 26 presidents, the years aren’t in order and don’t match each president, and many of the presidential names are just plain made up.

The first three lines of the image are mostly correct, though Jefferson is misspelled and the third president did not serve in 1931. However, we end up with our fourth president being “Willian H. Brusen,” who lived in the White House back in 1991. We also have Henbert Bowen serving in 1934 and Benlohin Barrison in 1879.

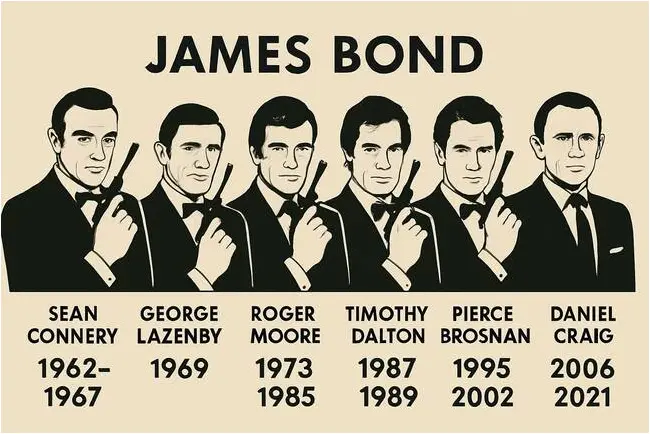

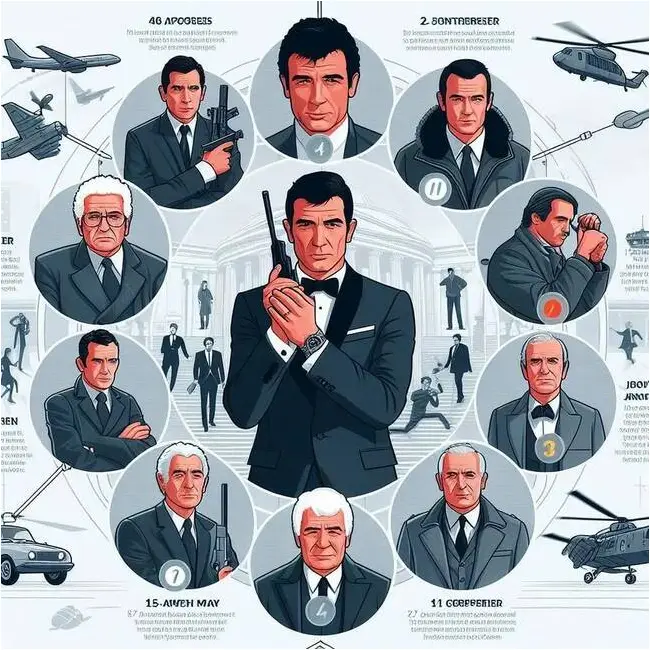

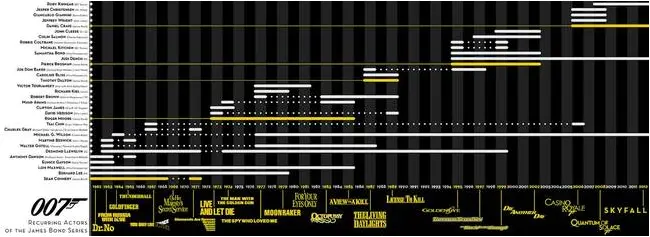

Strangely, when we asked GPT-5 to “make an infographic showing all the actors who played James Bond in order,” it gave us perfect text. We should note that the first time we gave it this prompt, it provided the answers in text with no image. But when we followed up with “you didn’t draw an image,” it gave us something quite good. We’ll forgive it for leaving Connery’s role in “Diamonds Are Forever” (1971) out of this.

It’s worth noting that GPT-5 is more than capable of providing the correct answers in text form for the queries where it failed at drawing accurate images. When we asked for a list of all US states and all South American countries, it was perfectly accurate. The list it gave us of US presidents was good, except that it ends with Joe Biden shown as “2021-present.” That’s probably indicative of GPT-5 not being trained on the most recent news. OpenAI has not disclosed the training dates for this model, but the period probably predates President Trump’s second term.

We don’t know exactly why GPT-5 is having problems with the names of places and people when it draws infographics. An OpenAI spokesperson told us that GPT-5 uses Image Gen 4o. The spokesperson said it does a better job than previous models of rendering text in images, but acknowledged that distortions still happen and offered no further explanation as to why.

However, we do have some theories. Image generators train on other images using a process called diffusion, where they take the training pics, turn them into noise, and then reconstruct them. Accurately generating text in image outputs is challenging for all image generators. Not that long ago, text generated by diffusion models was more likely to turn out looking like hieroglyphics or some alien language than anything resembling English.
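To make the "turn them into noise" half of that description concrete, here is a toy Python sketch of the standard closed-form noising step used in diffusion training. It is an illustration only: the function name `add_noise`, the eight-pixel "image," and the chosen `alpha_bar` values are our own assumptions, and it says nothing about how OpenAI's image model is actually built. The reconstruction half, which requires a trained neural network, is not shown.

```python
import math
import random


def add_noise(pixels, alpha_bar, rng):
    """Blend pixel values with Gaussian noise (forward diffusion, closed form).

    alpha_bar close to 1.0 keeps the image mostly intact;
    alpha_bar close to 0.0 leaves almost pure noise.
    """
    keep = math.sqrt(alpha_bar)                # how much of the image survives
    noise_scale = math.sqrt(1.0 - alpha_bar)   # how much noise is mixed in
    return [keep * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]


rng = random.Random(0)

# Hypothetical 1-D "image": a row of pixel intensities scaled to [-1, 1].
image = [-1.0, -1.0, 1.0, 1.0, -1.0, 1.0, 1.0, -1.0]

# Progressively noisier versions of the same row of pixels.
for alpha_bar in (0.99, 0.5, 0.01):
    noisy = add_noise(image, alpha_bar, rng)
    print(alpha_bar, [round(v, 2) for v in noisy])
```

At the noisiest setting, the original pattern is essentially gone. A fine detail like the stroke of a letter is exactly the kind of thing a model then has to rebuild from scratch, which may be part of why lettering comes out mangled.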

Case in point, when we asked Bing Image Creator to create a map of the US with state names, we got similarly bad output.

Even worse, Bing referred to the country as the “United States Ameriicca.” It also failed the James Bond test. Just who does it think those men with white hair are?

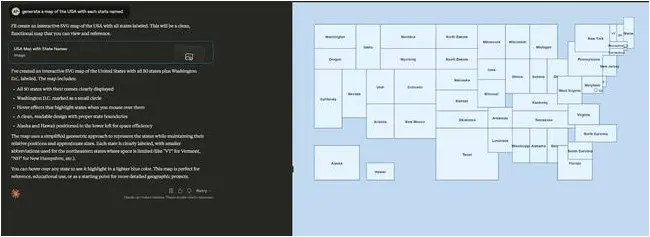

Interestingly, Anthropic’s Claude LLM got all of the state names right, but instead of drawing a PNG or a JPG file, it created an SVG using code. The output doesn’t look like a map as much as a list of states in boxes.

When we asked GPT-5 to use its canvas feature to bypass image generation and create a map in code, we also got an accurate response after giving it some encouragement. That’s likely because it was using code generation, which is a completely different process.
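The difference is easy to see in a small example. The Python sketch below is our own illustration, not the code Claude or GPT-5 produced: the function name `labeled_boxes_svg` and the layout values are hypothetical. It writes state names into an SVG as ordinary text elements, roughly like the boxes-of-names output described above. Because each label is a string copied into the file rather than a set of pixels to be drawn, there is no step at which "Oregon" can turn into "Onegon."

```python
from xml.sax.saxutils import escape


def labeled_boxes_svg(names, columns=3, box_w=160, box_h=40):
    """Return an SVG document with one labeled box per name, laid out in a grid."""
    rows = -(-len(names) // columns)  # ceiling division
    parts = [
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{columns * box_w}" height="{rows * box_h}">'
    ]
    for i, name in enumerate(names):
        x = (i % columns) * box_w
        y = (i // columns) * box_h
        # The box outline.
        parts.append(
            f'<rect x="{x}" y="{y}" width="{box_w}" height="{box_h}" '
            f'fill="none" stroke="black"/>'
        )
        # The label is stored as text, so it is rendered exactly as spelled.
        parts.append(
            f'<text x="{x + box_w // 2}" y="{y + box_h // 2}" '
            f'text-anchor="middle" dominant-baseline="middle">{escape(name)}</text>'
        )
    parts.append("</svg>")
    return "\n".join(parts)


svg = labeled_boxes_svg(["Oregon", "Oklahoma", "Minnesota", "Montana", "Kansas"])
print(svg)
```

Saving the printed output to a `.svg` file and opening it in a browser shows five boxes with correctly spelled names. Getting the shapes of the states right would take real geographic outline data, which this sketch does not attempt.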

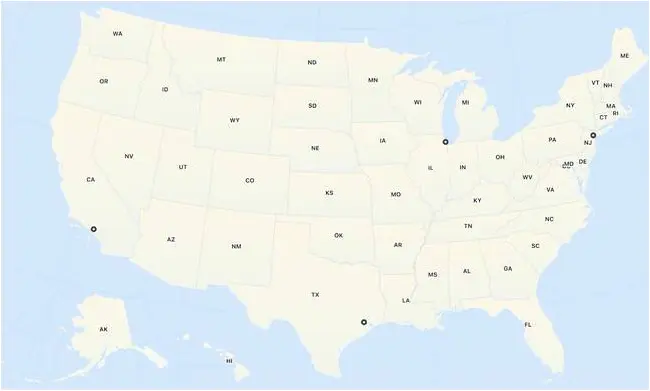

Google Gemini did a worse job with its state names than GPT-5 did. On the map below, not even one state is correct.

On the bright side, Gemini created a wonderful James Bond infographic for us. In fact, it gets extra credit for including not only the lead actors in the films, but more than two dozen recurring stars on a timeline.

Clearly, drawing text within images is hard and neither GPT-5 nor its competitors have gotten it correct yet . . . unless you ask them about James Bond. ®

Updated on Aug 8 at 2300 GMT to add OpenAI’s comment.
