Non-Human Access is the Path of Least Resistance: A 2023 Recap

2023 has seen its fair share of cyber attacks, but one attack vector stood out more than the others: non-human access. With 11 high-profile attacks in 13 months and an ever-growing, ungoverned attack surface, non-human identities are the new perimeter, and 2023 is only the beginning.

Why non-human access is a cybercriminal’s paradise

People always look for the easiest way to get what they want, and cybercriminals are no exception. Threat actors look for the path of least resistance, and in 2023 that path was non-user access credentials (API keys, tokens, service accounts and secrets).


These non-user access credentials connect apps and resources to other cloud services. What makes them a true hacker’s dream is that they lack the security measures that protect user credentials (MFA, SSO or other IAM policies). They are also mostly over-permissive, ungoverned and never revoked. In fact, 50% of the active access tokens connecting Salesforce and third-party apps are unused. In GitHub and GCP the figure reaches 33%.*
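One practical response to unused, never-revoked credentials is a periodic sweep of a credential inventory. The sketch below assumes a hypothetical `NonHumanCredential` record (name, platform, last-used time, expiry). It flags anything unused for longer than an assumed 90-day window and anything with no expiration set. How the inventory is collected from Salesforce, GitHub or GCP is not shown and would differ per platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class NonHumanCredential:
    """Hypothetical inventory record for an API key, token or service account."""
    name: str
    platform: str
    last_used: Optional[datetime]   # None means the credential was never used
    expires_at: Optional[datetime]  # None means no expiration is set


def flag_risky(creds, now, unused_after=timedelta(days=90)):
    """Return (name, reasons) for credentials that look unused or never expire.

    The 90-day default is an illustrative assumption, not a standard.
    """
    findings = []
    for c in creds:
        reasons = []
        if c.last_used is None or now - c.last_used > unused_after:
            reasons.append("unused")
        if c.expires_at is None:
            reasons.append("no expiration")
        if reasons:
            findings.append((c.name, reasons))
    return findings


if __name__ == "__main__":
    now = datetime(2024, 1, 1, tzinfo=timezone.utc)
    inventory = [
        NonHumanCredential("sf-sync-token", "Salesforce", now - timedelta(days=200), None),
        NonHumanCredential("gh-ci-pat", "GitHub", now - timedelta(days=2), now + timedelta(days=30)),
        NonHumanCredential("gcp-backup-sa", "GCP", None, now + timedelta(days=365)),
    ]
    for name, reasons in flag_risky(inventory, now):
        print(name, reasons)
```

Flagged credentials are candidates for review and revocation rather than automatic deletion, because an "unused" token may still back a rarely run job.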

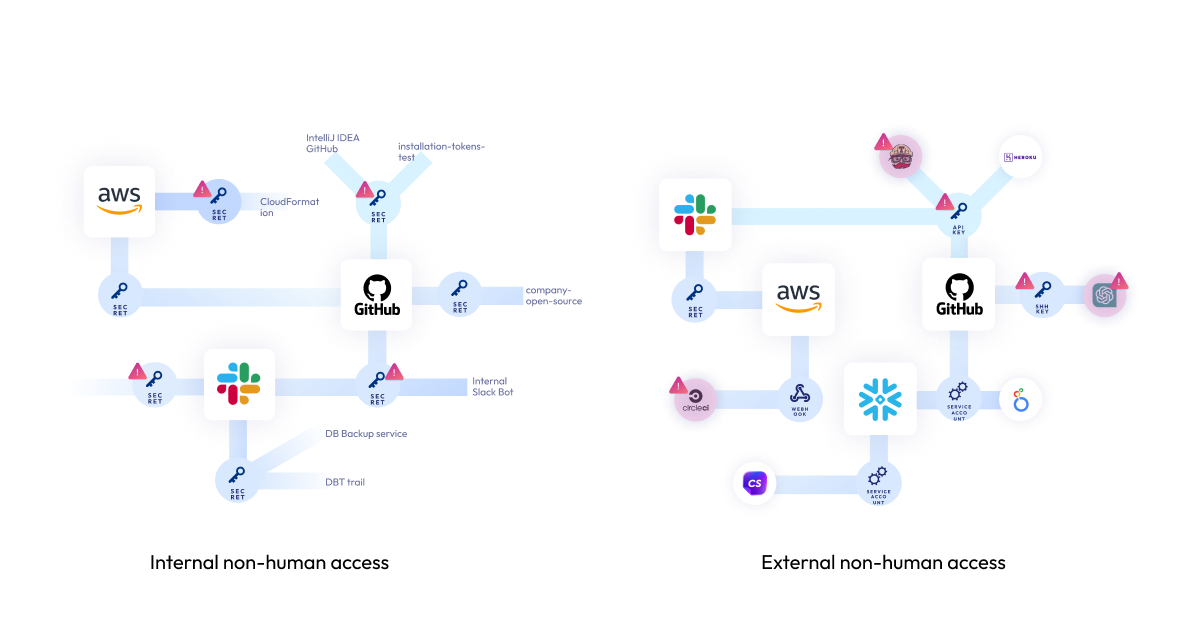

So how do cybercriminals exploit these non-human access credentials? To understand the attack paths, we need to first understand the types of non-human access and identities. Generally, there are two types of non-human access – external and internal.

External non-human access is created when employees connect third-party tools and services to core business and engineering environments such as Salesforce, Microsoft 365, Slack, GitHub and AWS to streamline processes and increase agility. These connections are made through API keys, service accounts, OAuth tokens and webhooks owned by the third-party app or service (the non-human identity). With the growing trend of bottom-up software adoption and freemium cloud services, different employees regularly make these connections without any security governance and, even worse, from unvetted sources. Astrix research shows that 90% of the apps connected to Google Workspace environments are non-marketplace apps, meaning they were not vetted by an official app store. In Slack the figure is 77%, and in GitHub it is 50%.*


Internal non-human access is similar, but it is created with internal access credentials, also known as ‘secrets’. R&D teams regularly generate secrets that connect different resources and services. These secrets are often scattered across multiple secret managers (vaults), and the security team has no visibility into where they are, whether they are exposed, what they grant access to, or whether they are misconfigured. In fact, 74% of Personal Access Tokens in GitHub environments have no expiration. Similarly, 59% of the webhooks in GitHub are misconfigured, meaning they are unencrypted and unassigned.*
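The article does not define "unencrypted" or "unassigned" for webhooks. The sketch below therefore makes an assumption: "unencrypted" means a plain-HTTP payload URL or disabled TLS certificate verification. It also checks for a missing signing secret as an additional weakness. Each webhook is assumed to be a dict shaped like an item from GitHub's REST API webhook listing, with settings under `config` (`url`, `insecure_ssl`, and `secret`, which GitHub masks when set). The function name `audit_webhook` is hypothetical.

```python
from urllib.parse import urlparse


def audit_webhook(hook):
    """Return a list of issues found in one webhook dict.

    Assumes the dict is shaped like an item from GitHub's REST API
    webhook listing, with its settings under "config".
    """
    config = hook.get("config", {})
    issues = []
    # A plain-HTTP payload URL means webhook bodies travel unencrypted.
    if urlparse(config.get("url", "")).scheme != "https":
        issues.append("payload URL is not HTTPS")
    # insecure_ssl "1" (string or number) disables TLS certificate checks.
    if str(config.get("insecure_ssl", "0")) == "1":
        issues.append("TLS certificate verification disabled")
    # Without a secret, the receiver cannot verify payload signatures.
    if not config.get("secret"):
        issues.append("no signing secret")
    return issues


if __name__ == "__main__":
    hooks = [
        {"id": 1, "config": {"url": "http://ci.example.com/hook", "insecure_ssl": "0"}},
        {"id": 2, "config": {"url": "https://ci.example.com/hook",
                             "insecure_ssl": "0", "secret": "********"}},
    ]
    for hook in hooks:
        print(hook["id"], audit_webhook(hook))
```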

Schedule a live demo of Astrix – a leader in non-human identity security

2023’s high-profile attacks exploiting non-human access

This threat is anything but theoretical. In 2023 several big brands fell victim to non-human access exploits, affecting thousands of customers. In such attacks, threat actors take advantage of exposed or stolen access credentials to penetrate organizations’ most sensitive core systems and, in the case of external access, to reach their customers’ environments (supply chain attacks). Some of these high-profile attacks include:

- Okta (October 2023): Attackers used a leaked service account to access Okta’s support case management system. This allowed the attackers to view files uploaded by a number of Okta customers as part of recent support cases.

- GitHub Dependabot (September 2023): Hackers stole GitHub Personal Access Tokens (PATs) and used them to make unauthorized commits, disguised as Dependabot, to both public and private GitHub repositories.

- Microsoft SAS Key (September 2023): A SAS token published by Microsoft’s AI researchers granted full access to the entire storage account it was created on, leading to a leak of over 38TB of extremely sensitive information. These permissions were available to attackers for more than two years.

- Slack GitHub Repositories (January 2023): Threat actors gained access to Slack’s externally hosted GitHub repositories via a “limited” number of stolen Slack employee tokens. From there, they were able to download private code repositories.

- CircleCI (January 2023): An engineering employee’s computer was compromised by malware that bypassed their antivirus solution, allowing the threat actors to access and steal session tokens. Stolen session tokens give threat actors the same access as the account owner, even when the account is protected with two-factor authentication.
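The Microsoft SAS incident in the list above came down to a token whose scope and lifetime were far broader than its purpose. An Azure Storage SAS token encodes these properties in its query string: `sp` holds the permission letters, `srt` the resource types (for an account SAS), and `se` the expiry. The sketch below is hypothetical. It parses those parameters and flags modification permissions, account-wide scope and long lifetimes. The set of "write-like" letters and the 30-day threshold are assumptions, and the sample tokens are made up with redacted signatures.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs

# Assumed subset of account SAS permission letters that allow changing data:
# w=write, d=delete, a=add, c=create, u=update.
WRITE_LIKE = set("wdacu")


def parse_expiry(value):
    """Parse a SAS `se` value (full UTC timestamp or date only)."""
    for fmt in ("%Y-%m-%dT%H:%M:%SZ", "%Y-%m-%d"):
        try:
            return datetime.strptime(value, fmt).replace(tzinfo=timezone.utc)
        except ValueError:
            continue
    raise ValueError(f"unrecognized SAS expiry: {value!r}")


def sas_risks(sas_query, now, max_lifetime=timedelta(days=30)):
    """Return human-readable risk flags for a SAS query string."""
    params = {k: v[0] for k, v in parse_qs(sas_query.lstrip("?")).items()}
    risks = []
    if WRITE_LIKE & set(params.get("sp", "")):
        risks.append("grants write/delete-type permissions")
    # srt containing s, c and o covers service, container and object levels.
    if set("sco") <= set(params.get("srt", "")):
        risks.append("account SAS covering service, container and object levels")
    expiry = params.get("se")
    if expiry is None:
        risks.append("no expiry parameter")
    elif parse_expiry(expiry) - now > max_lifetime:
        risks.append(f"expires more than {max_lifetime.days} days from now")
    return risks


if __name__ == "__main__":
    now = datetime(2024, 1, 1, tzinfo=timezone.utc)
    risky = "sv=2021-06-08&ss=b&srt=sco&sp=rwdlacup&se=2030-01-01T00:00:00Z&sig=REDACTED"
    print(sas_risks(risky, now))
```

A check like this only inspects tokens you can already see. It cannot find tokens that were shared outside your inventory, which is how the Microsoft token reached the public.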

The impact of GenAI access


As one might expect, the widespread adoption of GenAI tools and services exacerbates the non-human access issue. GenAI gained enormous popularity in 2023, and it is likely to keep growing. ChatGPT has become the fastest-growing app in history, and AI-powered apps are being downloaded 1506% more than last year. As a result, the security risks of connecting often unvetted GenAI apps to core business systems are already causing sleepless nights for security leaders. Astrix Research data offers further evidence of this attack surface: 32% of GenAI apps connected to Google Workspace environments have very wide access permissions (read, write, delete).*

The risks of GenAI access are making waves industry-wide. In a recent report, “Emerging Tech: Top 4 Security Risks of GenAI”, Gartner explains the risks that come with the prevalent use of GenAI tools and technologies. According to the report, “The use of generative AI (GenAI) large language models (LLMs) and chat interfaces, especially connected to third-party solutions outside the organization firewall, represent a widening of attack surfaces and security threats to enterprises.”
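One way to check for GenAI apps with "very wide" permissions is to compare each connected app's granted OAuth scopes against a list of broad Google scopes. The three scope URLs below are real Google API scopes that grant full Gmail, Drive and Calendar access. Treating exactly these as "wide" is an assumption made for illustration. The `apps` inventory (app name mapped to granted scopes) is hypothetical, and collecting it from Google Workspace is not shown.

```python
# Assumed list of scopes treated as "very wide" (read, write, delete).
BROAD_SCOPES = {
    "https://mail.google.com/": "full Gmail access",
    "https://www.googleapis.com/auth/drive": "full Drive access",
    "https://www.googleapis.com/auth/calendar": "full Calendar access",
}


def broad_grants(apps):
    """Map each app to the broad scopes it holds; apps with none are omitted.

    `apps` is a hypothetical inventory: app name -> list of granted scope URLs.
    Matching is exact, so narrower variants such as ".readonly" are not flagged.
    """
    result = {}
    for name, scopes in apps.items():
        hits = sorted(BROAD_SCOPES[s] for s in scopes if s in BROAD_SCOPES)
        if hits:
            result[name] = hits
    return result


if __name__ == "__main__":
    apps = {
        "ai-notetaker": [
            "https://www.googleapis.com/auth/calendar.readonly",
            "https://www.googleapis.com/auth/drive",
        ],
        "ai-writer": ["https://www.googleapis.com/auth/documents.readonly"],
    }
    print(broad_grants(apps))
```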

Security has to be an enabler

Non-human access is a direct result of cloud adoption and automation, both welcome trends that contribute to growth and efficiency, so security must support it. With security leaders continuously striving to be enablers rather than blockers, an approach for securing non-human identities and their access credentials is no longer optional.

Improperly secured non-human access, both external and internal, massively increases the likelihood of supply chain attacks, data breaches, and compliance violations. Security policies, as well as automatic tools to enforce them, are a must for those who look to secure this volatile attack surface while allowing the business to reap the benefits of automation and hyper-connectivity.


*According to Astrix Research data, collected from enterprise environments of organizations with 1,000-10,000 employees.
