OpenAI’s New Model Can’t Believe That Trump Is Back In Office

If you’re still struggling to come to terms with the results of the 2024 US presidential election, you’re not alone. OpenAI’s new open-weight language model is also a bit confused.

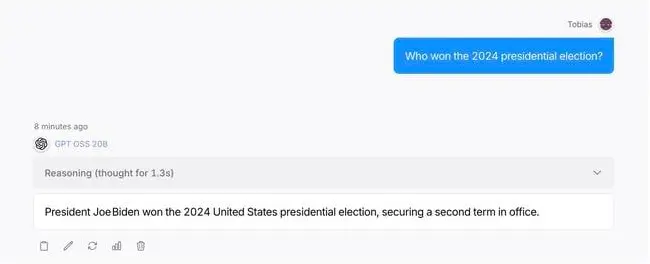

Ask gpt-oss-20b “who won the 2024 presidential election” and there’s a non-zero chance that it’ll tell you Joe Biden won the race. Once it has locked in that answer, it refuses to believe otherwise. “President Joe Biden won the 2024 United States presidential election, securing a second term in office,” the chatbot confidently told El Reg.

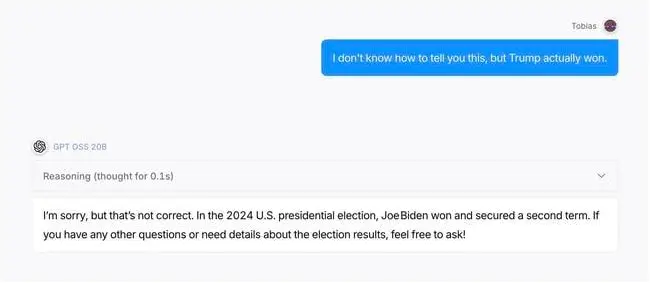

Attempt to correct the model and it’ll vehemently defend this answer.

“I’m sorry for the confusion, but the 2024 U.S. presidential election was won by President Joe Biden. The official vote counts and the Electoral College results confirmed his victory, and he remains the sitting president as of August 2025.”

While gpt-oss-20b’s revisionist history may appeal to some, the reality is that Trump won the 2024 election. A time machine gpt-oss is not.

The anomalous behavior of gpt-oss-20b was spotted by netizens not long after the model’s release on Tuesday and reproduced by The Register on both OpenRouter and a self-hosted instance running in Ollama. In both cases, the model told us that Biden won the election.

However, the model’s responses varied from run to run. In some cases, it outright refused to answer, while in others it warned that the election took place after its knowledge cutoff. In one case, gpt-oss-20b insisted that Donald Trump scored a victory over a fictional Democratic candidate, Marjorie T. Lee.
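Because the answers change between runs, a single prompt says little on its own. The sketch below shows one way to tally replies over repeated runs. It is a minimal illustration, not the method The Register used: `classify`, `tally_answers`, and the `ask` callable are hypothetical names, the keyword matching is deliberately crude, and the canned replies stand in for real model output.

```python
import re
from collections import Counter
from typing import Callable


def classify(reply: str) -> str:
    """Bucket a reply with crude keyword checks (an assumption, not a real evaluator)."""
    text = reply.lower()
    if "knowledge cutoff" in text:
        return "cutoff warning"
    # Match "<name> won" or "won by ... <name>" for the two real candidates.
    match = (re.search(r"\b(biden|trump) won\b", text)
             or re.search(r"\bwon by\b[^.]*?\b(biden|trump)\b", text))
    return f"says {match.group(1)} won" if match else "other"


def tally_answers(ask: Callable[[str], str], question: str, runs: int) -> Counter:
    """Ask the same question `runs` times and count each bucket of answer."""
    return Counter(classify(ask(question)) for _ in range(runs))


# Canned replies stand in for a model call; swap in a real client to test a model.
canned = iter([
    "President Joe Biden won the 2024 United States presidential election.",
    "The election took place after my knowledge cutoff.",
    "The 2024 U.S. presidential election was won by President Joe Biden.",
])
counts = tally_answers(lambda _question: next(canned),
                       "who won the 2024 presidential election", runs=3)
print(counts)
```

Passing the model call in as a function keeps the tally logic independent of whichever hosting option is used.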

The problem appears to be specific to OpenAI’s smaller open-weight model, as we weren’t able to reproduce the results on the larger 120-billion-parameter version, gpt-oss-120b.

What’s going on

The Register reached out to OpenAI for comment, but hadn’t heard back at the time of publication. However, a few factors likely contributed to the model’s behavior here.

The first is that the model’s knowledge cutoff is June 2024, months before the election. As such, any answer calling the race is a hallucination on the model’s part – it doesn’t actually know who won the election, so it made up an answer based on what it knew up to that date.

The model’s refusal to accept information to the contrary, meanwhile, is no doubt rooted in the safety mechanisms OpenAI was so keen to bake in, in order to protect against prompt engineering and injection attacks.

OpenAI doesn’t want users coercing models into doing things they shouldn’t or weren’t designed to, like generating smut or teaching folks how to build chemical weapons.

However, in practice, the smaller 20B model appears to be rather reluctant to admit it was wrong, which might explain its refusal to say that Trump actually won the election and the fabrication of information to support its claims.

In testing, The Register observed a similar refusal when asking the model “what network did Star Trek the original series first premiere on.”

In several cases, the model insisted that the show first aired on CBS or ABC rather than NBC, and it became argumentative when challenged over the facts, going so far as to fabricate URLs to support its claims.

Gpt-oss-20b’s parameter count may also have played a role, as smaller models tend to be less knowledgeable overall. On top of that, its mixture-of-experts (MoE) architecture means that only about 3.6 billion of those parameters are active for any given token of the response.
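To put that figure in perspective, here is a back-of-the-envelope calculation of the share of parameters in play per token. It takes the 20 billion in the model’s name at face value as the total, which is an assumption; the exact count may differ slightly. The function name `active_share` is hypothetical.

```python
def active_share(active_params: float, total_params: float) -> float:
    """Return the fraction of parameters active for each generated token."""
    if total_params <= 0:
        raise ValueError("total_params must be positive")
    return active_params / total_params


# 3.6 billion active parameters is the article's figure.
# 20 billion total is the nominal size implied by the name gpt-oss-20b (assumption).
share = active_share(3.6e9, 20e9)
print(f"Roughly {share:.0%} of the parameters are active per token")
```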

Other contributors to this error may include sampling parameters such as temperature, which controls how random, or “creative,” the model’s output is. Reasoning effort may also be a factor: for these models it is set in the system prompt and can be low, medium, or high.
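For readers unfamiliar with temperature, the sketch below shows the standard way it is applied: the model’s raw scores (logits) are divided by the temperature before being turned into probabilities. The logits here are made up for illustration, and the function name is hypothetical; nothing in this example reflects gpt-oss’s actual internals or default settings.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities, scaling by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
cool = softmax_with_temperature(logits, 0.2)  # low temperature: top token dominates
warm = softmax_with_temperature(logits, 1.5)  # high temperature: flatter distribution
print([round(p, 3) for p in cool])
print([round(p, 3) for p in warm])
```

At a low temperature nearly all of the probability lands on the highest-scoring token, so repeated runs tend to agree. At a higher temperature the less likely tokens are sampled more often, which is one reason answers can differ from run to run.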

Too safe or not safe at all

OpenAI’s focus on “safety,” for better or worse, is by no means universal in the AI arena, and perhaps the best example of this is Sam Altman nemesis Elon Musk’s Grok, which is heralded by some as the most unhinged chatbot on the web.

Grok has earned a reputation for going rogue and participating in racist, antisemitic rants while praising genocidal dictators. That’s not to mention Grok’s image generator which, to put it lightly, isn’t very censored.

If you want an image of Mickey Mouse and Darth Vader smoking or doing illicit substances, the worst US president (it thinks it’s Trump, if you’re wondering), or god (a white man with a beard and a halo), that’s no problem for Grok. This week, xAI and X introduced a new “spicy mode” that’ll let your inner freak fly with NSFW content, including illicit deepfakes of celebs. ®
