Indicators of compromise (IOCs): how we collect and use them

It would hardly be an exaggeration to say that the phrase “indicators of compromise” (or IOCs) can be found in every report published on Securelist. The phrase is usually followed by MD5 hashes[1], IP addresses and other technical data that should help information security specialists counter a specific threat. But how exactly can indicators of compromise help them in their everyday work? To find the answer, we asked three Kaspersky experts to share their experience: Pierre Delcher, Senior Security Researcher in GReAT; Roman Nazarov, Head of SOC Consulting Services; and Konstantin Sapronov, Head of Global Emergency Response Team.

What is cyber threat intelligence, and how do we use it in GReAT?

We at GReAT are focused on identifying, analyzing and describing upcoming or ongoing, preferably unknown cyberthreats, to provide our customers with detailed reports, technical data feeds, and products. This is what we call cyber threat intelligence. It is a highly demanding activity, which requires time, multidisciplinary skills, efficient technology, innovation and dedication. It also requires a large and representative set of knowledge about cyberattacks, threat actors and associated tools over an extended timeframe. We have been doing so since 2008, benefiting from Kaspersky’s decades of cyberthreat data management, and unrivaled technologies. But why are we offering cyber threat intelligence at all?

The intelligence we provide on cyberthreats is composed of contextual information (such as targeted organization types, threat actor tactics, techniques and procedures – or TTPs – and attribution hints), detailed analysis of the malicious tools that are used, as well as indicators of compromise and detection techniques. This in effect offers knowledge, enables anticipation, and supports three main global goals in an ever-growing threat landscape:

- Strategic level: helping organizations decide how much of their resources they should invest in cybersecurity. This is made possible by the contextual information we provide, which in turn makes it possible to anticipate how likely an organization is to be targeted, and what the adversaries’ capabilities are.

- Operational level: helping organizations decide where to focus their existing cybersecurity efforts and capabilities. No organization in the world can claim to have limitless cybersecurity resources and be able to prevent or stop every kind of threat! Detailed knowledge on threat actors and their tactics makes it possible for a given sector of activity to focus prevention, protection, detection and response capabilities on what is currently being (or will be) targeted.

- Tactical level: helping organizations decide how relevant threats should be technically detected, sorted, and hunted for, so they can be prevented or stopped in a timely fashion. This is made possible by the descriptions of standardized attack techniques and the detailed indicators of compromise we provide, which are strongly backed by our own widely recognized analysis capabilities.

What are indicators of compromise?

To keep it practical, indicators of compromise (IOCs) are technical data that can be used to reliably identify malicious activities or tools, such as malicious infrastructure (hostnames, domain names, IP addresses), communications (URLs, network communication patterns, etc.) or implants (hashes that designate files, file paths, Windows registry keys, artifacts that are written in memory by malicious processes, etc.). While most of them will in practice be file hashes – designating samples of malicious implants – and domain names – identifying malicious infrastructure, such as command and control (C&C) servers – their nature, format and representation are not limited. Some IOC sharing standards exist, such as STIX.
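To make the most common IOC types concrete, here is a minimal sketch that guesses the type of an indicator from its textual form. The helper name `classify_ioc` and the type labels are assumptions made for illustration; this is not how any Kaspersky product or sharing standard classifies indicators, and real-world parsing has to handle many more forms (defanged values, IPv6 ranges, file paths, registry keys, and so on).

```python
import ipaddress
import re

# Hex digest lengths of the most common file hash algorithms.
_HASH_LENGTHS = {32: "md5", 40: "sha1", 64: "sha256"}
_HEX = re.compile(r"^[0-9a-fA-F]+$")
# Deliberately simple domain pattern: dot-separated labels plus a TLD.
_DOMAIN = re.compile(r"^(?=.{1,253}$)([a-z0-9-]{1,63}\.)+[a-z]{2,63}$", re.IGNORECASE)


def classify_ioc(value: str) -> str:
    """Return a rough type label for an indicator string (hypothetical helper)."""
    value = value.strip()
    if _HEX.match(value) and len(value) in _HASH_LENGTHS:
        return _HASH_LENGTHS[len(value)]
    try:
        ipaddress.ip_address(value)
        return "ip"
    except ValueError:
        pass
    if value.lower().startswith(("http://", "https://")):
        return "url"
    if _DOMAIN.match(value):
        return "domain"
    return "unknown"
```

Sorting indicators by type first is useful because each type is searched in a different place: hashes in file and process data, domains and URLs in DNS and proxy logs, IP addresses in connection logs.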

As mentioned before, IOCs are one result of cyber threat intelligence activities. They are useful at operational and tactical levels to identify malicious items and help associate them with known threats. IOCs are provided to customers in intelligence reports and in technical data feeds (which can be consumed by automatic systems), as well as further integrated in Kaspersky products or services (such as sandbox analysis products, Kaspersky Security Network, endpoint and network detection products, and the Open Threat Intelligence Portal to some extent).

How does GReAT identify IOCs?

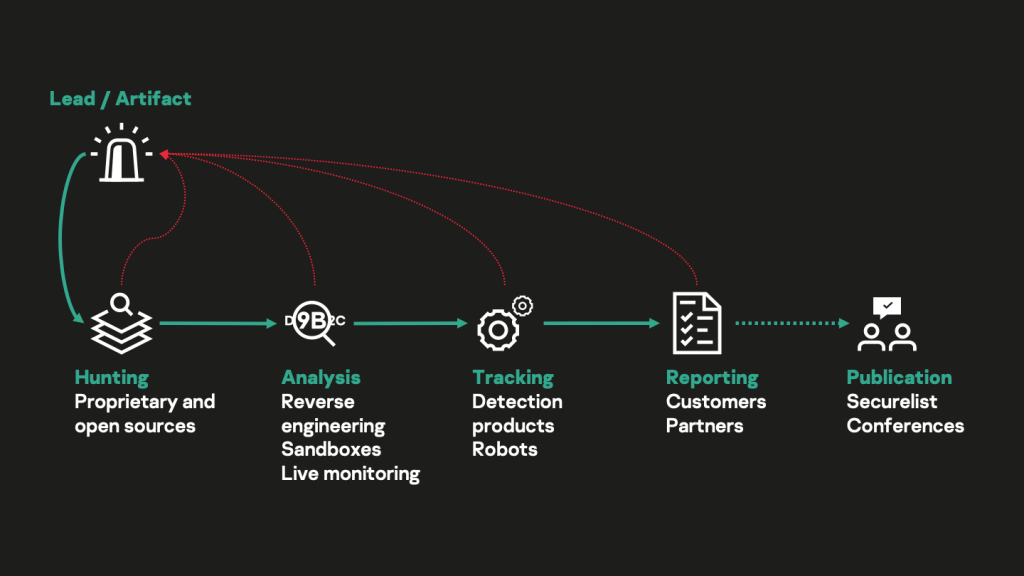

As part of the threat-hunting process, which is one facet of cyber threat intelligence activity (see the overview picture), GReAT aims at gathering as many IOCs as possible about a given threat or threat actor, so that customers can in turn reliably identify or hunt for them, while benefiting from maximum flexibility, depending on their capabilities and tools. But how are those IOCs identified and gathered? The rule of thumb on threat hunting and malicious artifact collection is that there is no such thing as a fixed magic recipe: several sources, techniques and practices will be combined, on a case-by-case basis. The more comprehensively those distinct sources are researched, and the more thoroughly analysis practices are executed, the more IOCs will be gathered. Those research activities and practices can only be efficiently orchestrated by knowledgeable analysts, who are backed by extensive data sources and solid technologies.

General overview of GReAT cyber threat intelligence activities

GReAT analysts will leverage the following sources of collection and analysis practices to gather intelligence, including IOCs – while these are the most common, actual practices vary and are only limited by creativity or practical availability:

- In-house technical data: this includes various detection statistics (often designated as “telemetry”[2]), proprietary files, logs and data collections that have been built across time, as well as the custom systems and tools that make it possible to query them. This is the most precious source of intelligence as it provides unique and reliable data from trusted systems and technologies. By searching for any previously known IOC (e.g., a file hash or an IP address) in such proprietary data, analysts can find associated malicious tactics, behaviors, files and details of communications that directly lead to additional IOCs, or will produce them through analysis. Kaspersky’s private Threat Intelligence Portal (TIP), which is available to customers as a service, offers limited access to such in-house technical data.

- Open and commercially available data: this includes various services and file data collections that are publicly available or sold by third parties, such as online file scanning services (e.g., VirusTotal), network system search engines (e.g., Onyphe), passive DNS databases, public sandbox reports, etc. Analysts can search those sources the same way as proprietary data. While some of these data are conveniently available to anyone, information about the collection or execution context, the primary source of information, or data processing details is often not provided. As a result, such sources cannot be trusted by GReAT analysts as much as in-house technical data.

- Cooperation: by sharing intelligence and developing relationships with trusted partners such as peers, digital service providers, computer security incident response teams (CSIRTs), non-profit organizations, governmental cybersecurity agencies, or even some Kaspersky customers, GReAT analysts can sometimes acquire additional knowledge in return. This vital part of cyber threat intelligence activity enables a global response to cyberthreats, broader knowledge of threat actors, and additional research that would not otherwise be possible.

- Analysis: this includes automated and human-driven activities that consist of thoroughly dissecting gathered malicious artifacts, such as malicious implants, or memory snapshots, in order to extract additional intelligence from them. Such activities include reverse-engineering or live malicious implant execution in controlled environments. By doing so, analysts will often be able to discover concealed (obfuscated, encrypted) indicators, such as command and control infrastructure for malware, unique development practices from malware authors, or additional malicious tools that are delivered by a threat actor as an additional stage of an attack.

- Active research: this includes specific threat research operations, tools, or systems (sometimes called “robots”) that are built by analysts with the specific intent to continuously look for live malicious activities, using generic and heuristic approaches. Those operations, tools or systems include, but are not limited to, honeypots[3], sinkholing[4], internet scanning and some specific behavioral detection methods from endpoint and network detection products.

How does GReAT consume IOCs?

Sure, GReAT provides IOCs to customers, or even to the public, as part of its cyber threat intelligence activities. However, before providing them, the IOCs are also a cornerstone of the intelligence collection practices described here. IOCs enable GReAT analysts to pivot from an analyzed malicious implant to additional file detection, from a search in one intelligence source to another. IOCs are thus the common technical interface to all research processes. As an example, one of our active research heuristics might identify previously unknown malware being tentatively executed on a customer system. Looking for the file hash in our telemetry might help identify additional execution attempts, as well as malicious tools that were leveraged by a threat actor, just before the first execution attempt. Reverse engineering of the identified malicious tools might in turn produce additional network IOCs, such as malicious communication patterns or command and control server IP addresses. Looking for the latter in our telemetry will help identify additional files, which in turn will enable further file hash research. IOCs enable a continuous research cycle to spin, but it only begins at GReAT; by providing IOCs as part of an intelligence service, GReAT expects the cycle to keep spinning at the customer’s side.
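The pivoting cycle described above can be sketched as a breadth-first expansion over related indicators. This is only an illustration under stated assumptions: `TELEMETRY` is a toy in-memory dictionary with invented values standing in for real data sources, and `pivot` is a hypothetical helper, not a GReAT tool.

```python
from collections import deque

# Toy stand-in for telemetry: maps an IOC to the IOCs observed alongside it.
# All values are invented for illustration.
TELEMETRY = {
    "hash:aaa": ["ip:198.51.100.7", "hash:bbb"],
    "ip:198.51.100.7": ["hash:ccc"],
    "hash:bbb": [],
    "hash:ccc": ["ip:198.51.100.7"],
}


def pivot(seed: str, max_rounds: int = 3) -> set[str]:
    """Expand from one IOC to related IOCs, at most max_rounds hops away."""
    seen = {seed}
    queue = deque([(seed, 0)])
    while queue:
        ioc, depth = queue.popleft()
        if depth == max_rounds:
            continue  # do not pivot further from IOCs at the depth limit
        for related in TELEMETRY.get(ioc, []):
            if related not in seen:
                seen.add(related)
                queue.append((related, depth + 1))
    return seen
```

Starting from `"hash:aaa"`, the loop reaches the IP address and a second file hash in the first round, and a third hash through the IP address in the next one. The `seen` set is what keeps the cycle from revisiting the same indicator forever.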

Apart from their direct use as research tokens, IOCs are also carefully categorized, attached to malicious campaigns or threat actors, and utilized by GReAT when leveraging internal tools. This IOC management process allows for two major and closely related follow-up activities:

- Threat tracking: by associating known IOCs (as well as advanced detection signatures and techniques) to malicious campaigns or threat actors, GReAT analysts can automatically monitor and sort detected malicious activities. This simplifies any subsequent threat hunting or investigation, by providing a research baseline of existing associated IOCs for most new detections.

- Threat attribution: by comparing newly found or extracted IOCs to those that have already been categorized, GReAT analysts can quickly establish links from unknown activities to known malicious campaigns or threat actors. While a common IOC between a known campaign and newly detected activity is never enough to draw any attribution conclusion, it’s always a helpful lead to follow.
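As a rough sketch of the comparison behind threat attribution, the snippet below intersects a set of newly extracted IOCs with IOCs already attached to campaigns. The catalog `KNOWN`, the campaign names and the helper `attribution_leads` are all hypothetical, and the result is only a list of leads to follow, never an attribution conclusion.

```python
# Hypothetical catalog: campaign name -> IOCs already attached to it.
KNOWN = {
    "CampaignA": {"c2.example.net", "198.51.100.7", "hash-a1"},
    "CampaignB": {"203.0.113.9", "hash-b1"},
}


def attribution_leads(new_iocs: set[str]) -> list[tuple[str, list[str]]]:
    """Return campaigns sharing at least one IOC, most overlaps first."""
    leads = []
    for campaign, iocs in KNOWN.items():
        shared = sorted(new_iocs & iocs)
        if shared:
            leads.append((campaign, shared))
    leads.sort(key=lambda lead: len(lead[1]), reverse=True)
    return leads
```

Returning the shared indicators alongside the campaign name matters: an analyst needs to see which IOC created the link in order to judge how much weight it deserves.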

IOC usage scenarios in SOCs

From our perspective, every security operations center (SOC) uses known indicators of compromise in its operations, one way or another. But before we talk about IOC usage scenarios, let’s go with the simple idea that an IOC is a sign of a known attack.

At Kaspersky, we advise and design SOC operations for different industries and in different formats, but IOC usage and management are always part of the SOC framework we suggest our customers implement. Let’s highlight some usage scenarios of IOCs in an SOC.

Prevention

In general, today’s SOC best practices tell us to defend against attacks by blocking potential threats at the earliest possible stage. If we know the exact indicators of an attack, then we can block it, and it is better to do so on multiple levels (both network and endpoint) of our protected environment. This is the concept of defense in depth. Blocking any attempts to connect to a malicious IP, resolve a C2 FQDN, or run malware with a known hash should prevent an attack, or at least not give the attacker an easy target. It also saves time for SOC analysts and reduces the noise of SOC false positive alerts. However, an excellent level of IOC confidence is vital for the prevention usage scenario; otherwise, a huge number of false positive blocking rules will affect the business functions of the protected environment.
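A minimal sketch of that confidence requirement: only indicators above a threshold are turned into blocking rules, and each type goes to the enforcement point where it can be blocked. The `Ioc` record, the 0-100 confidence scale, the default threshold of 90 and the enforcement point names are assumptions for illustration, not values recommended by any vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ioc:
    value: str
    kind: str        # "ip", "domain" or "hash"
    confidence: int  # 0-100, hypothetical scale


def build_blocklists(iocs, min_confidence=90):
    """Group high-confidence IOCs by the layer that can block them."""
    lists = {"firewall": [], "dns": [], "endpoint": []}
    target = {"ip": "firewall", "domain": "dns", "hash": "endpoint"}
    for ioc in iocs:
        if ioc.confidence >= min_confidence and ioc.kind in target:
            lists[target[ioc.kind]].append(ioc.value)
    return lists
```

Indicators that fall below the threshold are not discarded in practice; they remain candidates for the detection scenario, where a false positive costs analyst time rather than business availability.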

Detection

The most popular scenario for IOC usage in an SOC is the automatic matching of our infrastructure telemetry against a huge collection of all possible IOCs. The most common place to do this is a SIEM. This scenario is popular for a number of reasons:

- A SIEM records multiple types of logs, meaning we can match the same IOC against multiple log types, e.g., a domain name against both the DNS requests received from corporate DNS servers and the requested URLs obtained from the proxy.

- Matching in SIEM helps us to provide extended context for analyzed events: in the alert’s additional information an analyst can see why the IOC-based alert was triggered, the type of threat, confidence level, etc.

- Having all the data on one platform can reduce the workload for the SOC team to maintain infrastructure and prevent the need to organize additional event routing, as described in the following case.

Another popular solution for matching is the Threat Intelligence Platform (TIP). It usually supports better matching scenarios (such as the usage of wildcards) and is intended to reduce some of the performance impact generated by correlation in SIEM. Another huge advantage of TIP is that this type of solution was initially designed to work with IOCs, to support their data schema and manage them, with more flexibility and features to set up detection logic based on an IOC.
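To illustrate what wildcard matching means in practice, here is a small sketch using shell-style patterns from Python's standard `fnmatch` module. The patterns and the helper `matches_any` are invented for the example; actual TIP products define their own pattern syntax and matching engines.

```python
from fnmatch import fnmatchcase

# Hypothetical wildcard IOCs: "*" matches any run of characters,
# "?" matches exactly one character.
PATTERNS = ["*.bad-domain.example", "update-??.example.net"]


def matches_any(hostname: str, patterns=PATTERNS) -> bool:
    """Check a hostname against wildcard IOCs, ignoring case and a trailing dot."""
    host = hostname.lower().rstrip(".")
    return any(fnmatchcase(host, pattern) for pattern in patterns)
```

With these patterns, `cdn.bad-domain.example` matches while the bare `bad-domain.example` does not, which shows why wildcard IOCs have to be written with care: a pattern that is slightly too narrow silently misses traffic, and one that is too broad produces false positives.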

When it comes to detection we usually have a lower requirement for IOC confidence because, although unwanted, false positives during detection are a common occurrence.
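The detection scenario can be sketched as follows: one domain IOC is matched against two different log types, and the resulting alert is enriched with the context stored alongside the IOC. The event fields, the `IOC_CONTEXT` store and the threat name are hypothetical; a real SIEM would express this as a correlation rule over normalized events rather than as Python code.

```python
from urllib.parse import urlsplit

# Hypothetical IOC store: indicator -> context shown to the analyst.
IOC_CONTEXT = {
    "c2.example.net": {"threat": "ExampleRAT", "confidence": 80},
}

# Hypothetical normalized events from two log sources.
EVENTS = [
    {"source": "dns", "host": "pc-01", "query": "c2.example.net"},
    {"source": "proxy", "host": "pc-02", "url": "http://c2.example.net/gate.php"},
    {"source": "dns", "host": "pc-03", "query": "intranet.example.org"},
]


def observed_domain(event) -> str:
    """Extract the domain name from a DNS or proxy event."""
    if event["source"] == "dns":
        return event["query"].lower()
    if event["source"] == "proxy":
        return (urlsplit(event["url"]).hostname or "").lower()
    return ""


def match_events(events, ioc_context=IOC_CONTEXT):
    """Return one enriched alert per event that references a known IOC."""
    alerts = []
    for event in events:
        domain = observed_domain(event)
        if domain in ioc_context:
            alerts.append({"host": event["host"], "source": event["source"],
                           "ioc": domain, **ioc_context[domain]})
    return alerts
```

Here the same indicator raises alerts for `pc-01` (from the DNS log) and `pc-02` (from the proxy log), and each alert carries the threat name and confidence level so the analyst can see why it was triggered.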

Investigation

Another routine where we work with IOCs is in the investigation phase of any incident. In this case, we are usually limited to a specific subset of IOCs – those that were revealed within the particular incident. These IOCs are needed to identify additional affected targets in our environment to define the real scope of the incident. Basically, the SOC team has a loop of IOC re-usage:

1. Identify incident-related IOCs.

2. Search for the IOCs on additional hosts.

3. Identify additional IOCs on the revealed targets, then repeat step 2.
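The loop above can be sketched as a fixed-point iteration that stops once a round reveals neither new hosts nor new IOCs. `HOST_IOCS` is a toy evidence map with invented values, and the sketch assumes that everything listed for a host is incident-relevant; in a real investigation an analyst decides which artifacts on an affected host count as IOCs.

```python
# Hypothetical evidence: which incident-relevant artifacts were found on which host.
HOST_IOCS = {
    "pc-01": {"hash-a", "198.51.100.7"},
    "pc-02": {"198.51.100.7", "hash-b"},
    "srv-01": {"hash-b"},
    "pc-09": {"hash-z"},
}


def incident_scope(initial_iocs):
    """Repeat search and collection until the scope stops growing."""
    iocs, hosts = set(initial_iocs), set()
    while True:
        # Step 2: search for the known IOCs on all hosts.
        hit = {host for host, found in HOST_IOCS.items() if found & iocs}
        # Step 3: collect additional IOCs from the hosts that matched.
        new_iocs = set().union(*(HOST_IOCS[host] for host in hit)) - iocs
        if hit <= hosts and not new_iocs:
            return hosts, iocs
        hosts |= hit
        iocs |= new_iocs
```

Starting from the single indicator `hash-a`, the scope grows from `pc-01` to `pc-02` (through the shared IP address) and then to `srv-01` (through `hash-b`), while `pc-09` stays out of scope because nothing links it to the incident.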

Containment, Eradication and Recovery

The next steps of incident handling also apply IOCs. In these stages the SOC team focuses on IOCs obtained from the incident, and uses them in the following way:

- Containment – limit attacker abilities to act by blocking identified IOCs

- Eradication and Recovery – monitor for the absence of IOC-related behavior to verify that the eradication and recovery phases were completed successfully and the attacker’s presence in the environment was fully eliminated.
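The verification step can be sketched as a search for incident IOCs in events recorded after the eradication time. The event fields (`time`, `indicator`) and the helper `residual_hits` are assumptions for illustration; an empty result is a necessary check, not proof that the attacker is gone.

```python
from datetime import datetime


def residual_hits(events, incident_iocs, eradicated_at):
    """Return events that still reference incident IOCs after eradication."""
    return [
        event for event in events
        if event["time"] > eradicated_at and event["indicator"] in incident_iocs
    ]


# Invented sample data.
ERADICATED_AT = datetime(2024, 1, 10, 12, 0)
SAMPLE_EVENTS = [
    {"time": datetime(2024, 1, 9, 8, 0), "host": "pc-01", "indicator": "198.51.100.7"},
    {"time": datetime(2024, 1, 11, 3, 30), "host": "pc-02", "indicator": "198.51.100.7"},
    {"time": datetime(2024, 1, 11, 4, 0), "host": "pc-03", "indicator": "203.0.113.50"},
]
```

In this sample, the connection from `pc-02` after the eradication time is the only residual hit, and it would suggest that the host was missed or reinfected.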

Threat Hunting

By threat hunting we mean activity aimed at revealing threats, namely those that have bypassed SOC prevention and detection capabilities. In other words, we follow the assume-breach paradigm, which holds that despite all the prevention and detection capabilities, we may have missed a successful attack, so we have to analyze our infrastructure as though it is compromised and look for traces of the breach.

This brings us to IOC-based threat hunting. The SOC team analyzes information related to the attack and evaluates whether the threat is applicable to the protected environment. If it is, the hunter tries to find an IOC in past events (such as DNS queries, IP connection attempts, and process executions), or in the infrastructure itself – the presence of a specific file in the system, a specific value of a registry key, etc. The typical solutions supporting the SOC team with such activity are SIEM, EDR and TIP. For this type of scenario, the most suitable IOCs are those extracted from APT reports, or received from your TI peers.
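As an illustration of hunting in the infrastructure itself, the sketch below walks a directory tree and reports files whose SHA-256 hash appears in a set of known-bad hashes. The helper names are hypothetical, and the approach is deliberately naive: an EDR would typically answer this question from already collected file metadata instead of rehashing disks.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hunt_files(root: Path, known_bad: set[str]) -> list[Path]:
    """Return files under root whose SHA-256 is a known IOC (lowercase hex)."""
    return sorted(
        path for path in root.rglob("*")
        if path.is_file() and sha256_of(path) in known_bad
    )
```

Because a hash changes completely when a file differs even slightly, this kind of hunt only finds exact copies of known samples, which is one reason why hunters combine file hashes with network and behavioral indicators.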

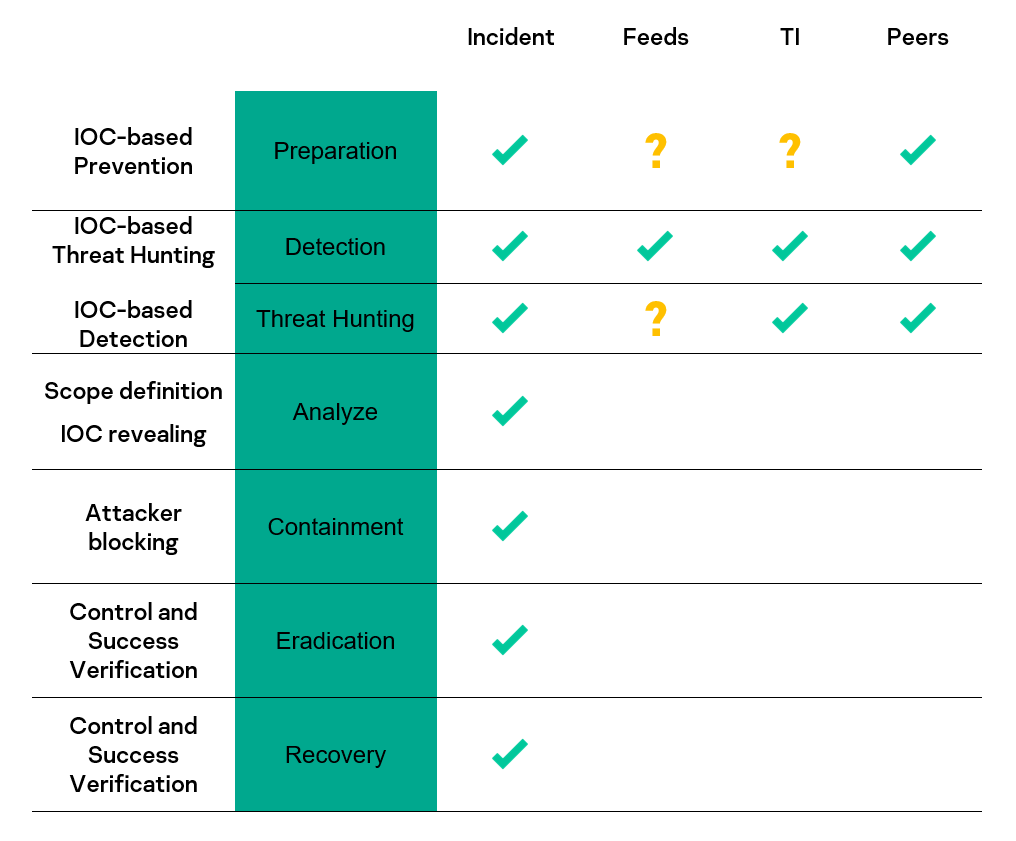

In IOC usage scenarios we have touched on different types of IOCs multiple times. Let’s summarize this information by breaking down IOC types according to their origin:

- Provider feeds – a subscription to IOCs provided by security vendors in the form of a feed. It usually contains a huge number of IOCs observed in different attacks. The level of confidence varies from vendor to vendor, and the SOC team should consider vendor specialization and geo profile in order to use relevant IOCs. Using feeds for prevention and threat hunting is questionable due to the potentially high level of false positives.

- Incident IOCs – IOCs generated by the SOC team during analysis of security incidents. Usually, the most trusted type of IOC.

- Threat intelligence IOCs – a huge family of IOCs generated by the TI team. The quality depends directly on the level of expertise of your TI analysts. The usage of TI IOCs for prevention depends heavily on the TI data quality and can trigger too many false positives, and therefore impact business operations.

- Peer IOCs – IOCs provided by peer organizations, government entities, and professional communities. Can usually be considered as a subset of TI IOCs or incident IOCs, depending on the nature of your peers.

If we summarize the reviewed scenarios, IOC origin, and their applicability, then map this information to NIST Incident Handling stages[5], we can create the following table.

IOC scenario usage in SOC

All these scenarios have different requirements for the quality of IOCs. Usually, in our SOC we don’t have too many issues with incident IOCs, but for the rest we must track quality and manage it in some way. For better quality management, the metrics below should be aggregated for every IOC origin, in order to evaluate the IOC source rather than individual IOCs. Some basic metrics, identified by our SOC Consultancy team, that can be implemented to measure IOC quality are:

- Conversion to incident – which proportion of IOCs has triggered a real incident. Applied for detection scenarios

- FP rate – false positive rate generated by IOC. Works for detection and prevention

- Uniqueness – applied for IOC source and tells the SOC team how unique the set of provided IOCs is compared to other providers

- Aging – whether an IOC source provides up-to-date IOCs or not

- Amount – number of provided IOCs by source

- Context information – usability and fullness of context provided with IOCs

To collect these metrics, the SOC team should carefully track every IOC origin, usage scenario and the result of use.
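A few of these metrics can be computed per source from a simple usage log, as in the sketch below. The exact formulas are our assumptions for illustration (for example, FP rate is taken here as the share of a source's alerts that turned out to be false positives), and the input shapes and the helper `source_metrics` are hypothetical.

```python
def source_metrics(provided, alerts):
    """Aggregate quality metrics per IOC source.

    provided: source name -> set of IOCs supplied by that source
    alerts:   list of (source, ioc, outcome), outcome being
              "incident" or "false_positive"
    """
    metrics = {}
    for source, iocs in provided.items():
        others = set().union(*(v for k, v in provided.items() if k != source))
        own_alerts = [a for a in alerts if a[0] == source]
        incident_iocs = {ioc for _, ioc, outcome in own_alerts if outcome == "incident"}
        false_positives = sum(1 for a in own_alerts if a[2] == "false_positive")
        metrics[source] = {
            "amount": len(iocs),
            # Share of provided IOCs that triggered a real incident.
            "conversion": len(incident_iocs) / len(iocs) if iocs else 0.0,
            # Share of this source's alerts that were false positives.
            "fp_rate": false_positives / len(own_alerts) if own_alerts else 0.0,
            # Share of provided IOCs that no other source supplied.
            "uniqueness": len(iocs - others) / len(iocs) if iocs else 0.0,
        }
    return metrics
```

Aging and context information are left out because they need per-IOC timestamps and a qualitative assessment, respectively. The important design point is the aggregation key: metrics are grouped by source, so the result tells the SOC team which origin to trust, tune or drop.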

How does the GERT team use IOCs in its work?

In GERT we specialize in the investigation of incidents and the main sources of information in our work are digital artifacts. By analyzing them, experts discover data that identifies activity directly related to the incident under investigation. Thus, the indicators of compromise allow experts to establish a link between the investigated object and the incident.

Throughout the entire cycle of responding to information security incidents, we use different IOCs at different stages. As a rule, when an incident occurs and a victim contacts us, we receive indicators of compromise that can serve to confirm the incident, attribute the incident to an attacker and make decisions on the initial response steps. For example, if we consider one of the most common incidents involving ransomware, then the initial artifacts are the files. The IOCs in this case will be the file names or their extensions, as well as the hash sums of the files. Such initial indicators make it possible to determine the type of cryptor, and to point to a group of attackers and their characteristic tactics, techniques and procedures. They also make it possible to define recommendations for an initial response.

The next set of IOCs that we can get are indicators from the data collected by triage. As a rule, these indicators show the attackers’ progress through the network and allow additional systems involved in the incident to be identified. Mostly, these are the names of compromised users, hash sums of malicious files, IP addresses and URLs. Here it is necessary to note the difficulties that arise. Attackers often use legitimate software that is already installed on the targeted systems (LOLBins). In this case, it is more difficult to distinguish malicious launches of such software from legitimate launches. For example, the mere fact that the PowerShell interpreter is running cannot be considered malicious without context and payload. In such cases it is necessary to use other indicators, such as timestamps, user names and correlation of events.
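One way to picture this use of context is the sketch below, which flags a launch of a legitimate tool only when it is correlated with other indicators: a compromised user name and closeness in time to a known malicious event. The record fields, the 30-minute window and the helper `suspicious_launches` are invented for the example and are not GERT's actual criteria.

```python
from datetime import datetime, timedelta


def suspicious_launches(launches, compromised_users, incident_times,
                        window=timedelta(minutes=30)):
    """Keep launches by compromised users that occur near a known malicious event."""
    flagged = []
    for launch in launches:
        near_incident = any(abs(launch["time"] - t) <= window for t in incident_times)
        if launch["user"] in compromised_users and near_incident:
            flagged.append(launch)
    return flagged


# Invented sample data: three PowerShell launches on different hosts.
LAUNCHES = [
    {"time": datetime(2024, 1, 9, 2, 10), "user": "svc_backup", "host": "srv-01"},
    {"time": datetime(2024, 1, 9, 14, 0), "user": "svc_backup", "host": "srv-01"},
    {"time": datetime(2024, 1, 9, 2, 15), "user": "alice", "host": "pc-07"},
]
```

With `svc_backup` known to be compromised and a malicious file dropped at 02:00, only the 02:10 launch is flagged: the afternoon launch is too far from the event, and the launch by `alice` involves an account that is not known to be compromised.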

Ultimately, all identified IOCs are used to identify compromised network resources and to block the actions of attackers. In addition, attack indicators are built on the basis of compromise indicators, which are used for preventive detection of attackers. In the final stage of the response, the indicators that are found are used to verify there are no more traces of the attackers’ presence in the network.

The result of each completed case with an investigation is a report that collects all the indicators of compromise and indicators of attack based on them. Monitoring teams should add these indicators to their monitoring systems and use them to proactively detect threats.

[1] A “hash” is a relatively short, fixed-length, sufficiently unique and non-reversible representation of arbitrary data, which is the result of a “hashing algorithm” (a mathematical function, such as MD5 or SHA-256). Contents of two identical files that are processed by the same algorithm result in the same hash. However, processing two files which only differ slightly will result in two completely different hashes. As a hash is a short representation, it is more convenient to designate (or look for) a given file using its hash than using its whole content.

[2] Telemetry designates the detection statistics and malicious files that are sent from detection products to the Kaspersky Security Network when customers agree to participate.

[3] Vulnerable, weak, and/or attractive systems that are deliberately exposed to the Internet and continuously monitored in a controlled fashion, with the expectation that they will be attacked by threat actors. When this happens, monitoring practices make it possible to detect new threats, exploitation methods or tools from attackers.

[4] Hijacking of known malicious command and control servers, with cooperation from Internet hosting providers or by leveraging threat actors’ errors and infrastructure desertion, in order to neutralize their malicious behaviors, monitor malicious communications, as well as identify and notify targets.

[5] NIST. Computer Security Incident Handling Guide. Special Publication 800-61 Revision 2
